Fractal Fract, Free Full-Text

By a mysterious writer

Last updated 15 April 2025

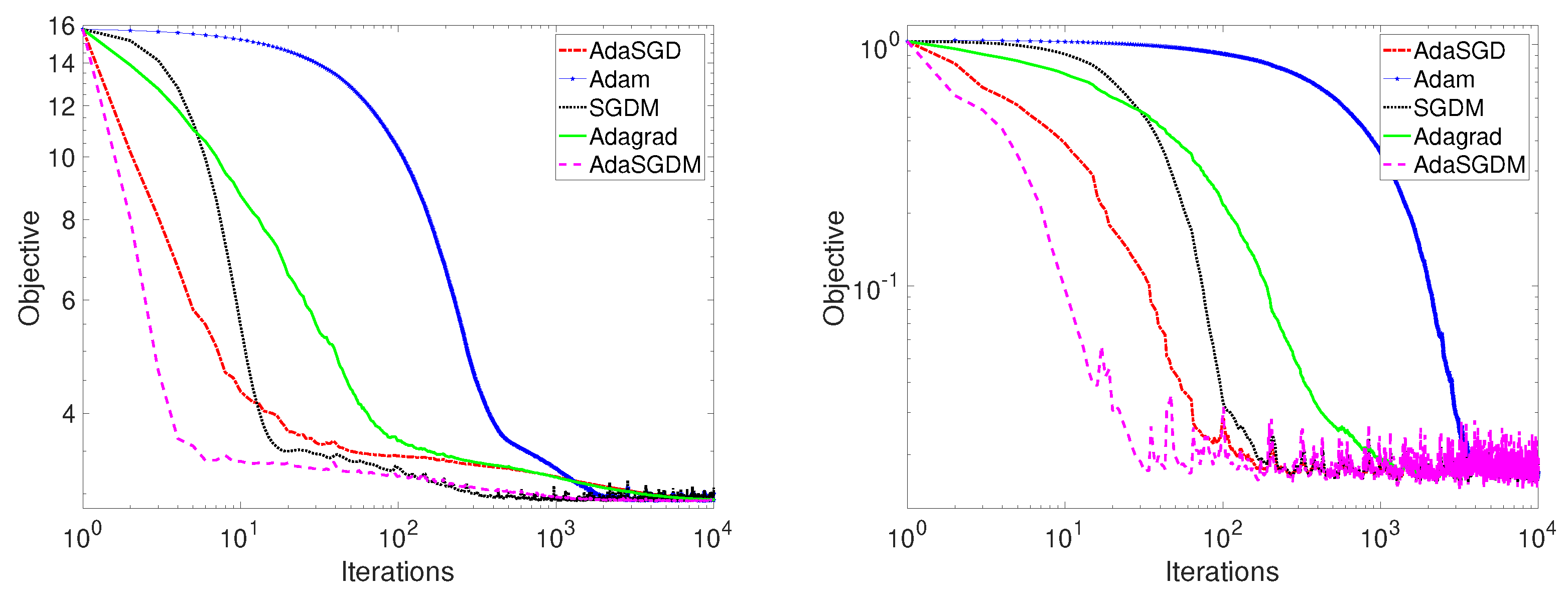

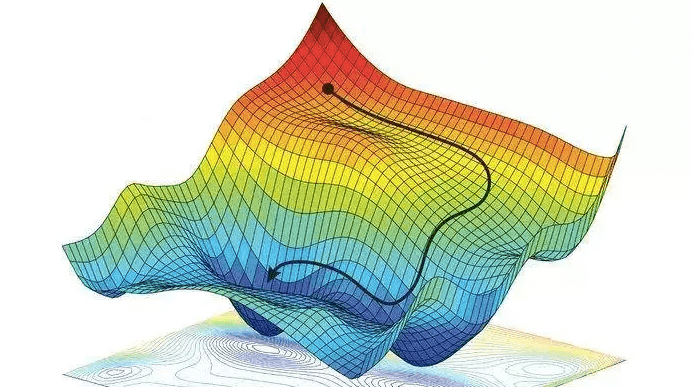

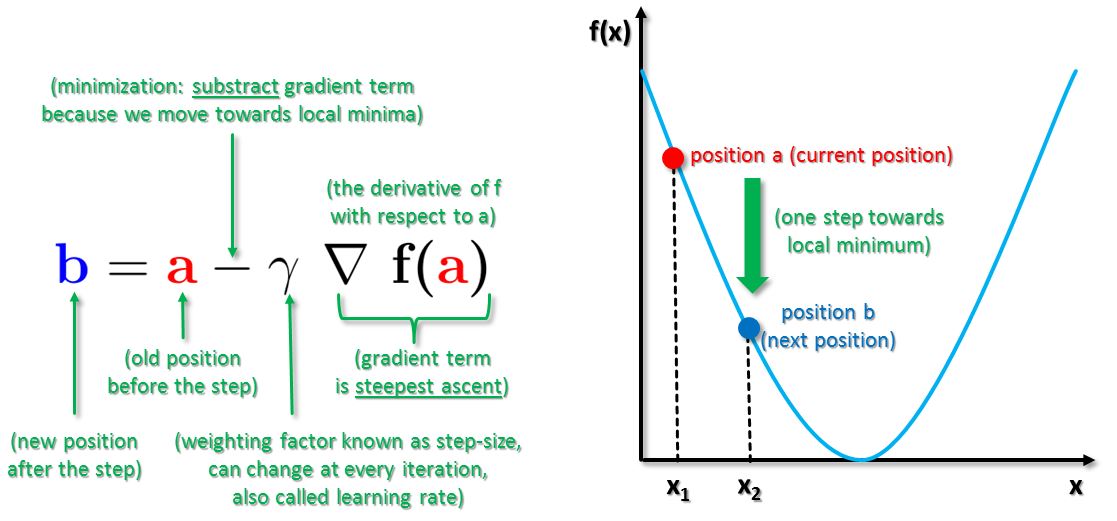

Stochastic gradient descent (SGD) is the method of choice for solving large-scale optimization problems in machine learning. However, choosing the step sizes in SGD methods effectively is challenging, and this choice can greatly influence the performance of SGD algorithms. In this paper, we propose a class of faster adaptive gradient descent methods, named AdaSGD, for solving both convex and non-convex optimization problems. The novelty of this method is a new adaptive step size that depends on the expectation of the past stochastic gradient and its second moment, which makes it efficient and scalable for big data and high-dimensional parameter spaces. We show theoretically that the proposed AdaSGD algorithm achieves a convergence rate of O(1/T) in both the convex and non-convex settings, where T is the maximum number of iterations. In addition, we extend AdaSGD to include momentum and obtain the same convergence rate for AdaSGD with momentum. To illustrate our theoretical results, we conduct several numerical experiments on problems arising in machine learning, which demonstrate the promise of the proposed method.
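
The abstract does not state the AdaSGD update formula, so the sketch below is not the paper's method. It only illustrates the general idea of an adaptive step size driven by second-moment statistics of past stochastic gradients, using a standard RMSProp/Adam-style estimate: each coordinate is updated with step size `lr_t / (sqrt(v_hat) + eps)`, where `v_hat` is a bias-corrected exponential moving average of squared stochastic gradients. The decay schedule `lr_t = lr / sqrt(t)`, the optional heavy-ball momentum buffer, the hyperparameter defaults, and the helper names `adaptive_sgd` and `noisy_quadratic_grad` are all illustrative assumptions.

```python
import math
import random


def adaptive_sgd(grad_fn, x0, steps=3000, lr=0.5, beta2=0.999,
                 momentum=0.0, eps=1e-8, seed=0):
    """Hypothetical adaptive SGD sketch (RMSProp-style); NOT the paper's AdaSGD rule.

    grad_fn(x, rng) must return a stochastic gradient (list of floats) at x.
    Each coordinate uses step size lr_t / (sqrt(v_hat) + eps), where
    lr_t = lr / sqrt(t) (assumed schedule) and v_hat is a bias-corrected
    running average of squared stochastic gradients (second-moment estimate).
    """
    rng = random.Random(seed)
    x = list(x0)
    v = [0.0] * len(x)    # running second-moment estimate, per coordinate
    buf = [0.0] * len(x)  # heavy-ball momentum buffer (equals g when momentum=0)
    for t in range(1, steps + 1):
        g = grad_fn(x, rng)
        lr_t = lr / math.sqrt(t)  # assumed O(1/sqrt(t)) decay
        for i in range(len(x)):
            v[i] = beta2 * v[i] + (1.0 - beta2) * g[i] * g[i]
            v_hat = v[i] / (1.0 - beta2 ** t)  # bias correction for zero init
            buf[i] = momentum * buf[i] + g[i]
            x[i] -= lr_t / (math.sqrt(v_hat) + eps) * buf[i]
    return x


def noisy_quadratic_grad(x, rng, target=(3.0, -2.0), sigma=0.1):
    """Stochastic gradient of f(x) = 0.5 * ||x - target||^2 plus Gaussian noise."""
    return [xi - ci + rng.gauss(0.0, sigma) for xi, ci in zip(x, target)]


if __name__ == "__main__":
    print(adaptive_sgd(noisy_quadratic_grad, [0.0, 0.0]))
    print(adaptive_sgd(noisy_quadratic_grad, [0.0, 0.0], momentum=0.9))
```

On this toy problem (minimizer (3, -2), gradient noise with standard deviation 0.1), both the plain and the momentum variants should finish close to the minimizer; the exact values depend on the random seed. Scaling by `sqrt(v_hat)` makes the effective step size shrink where gradients have historically been large and grow where they have been small, which is the intuition behind second-moment-based adaptive methods.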

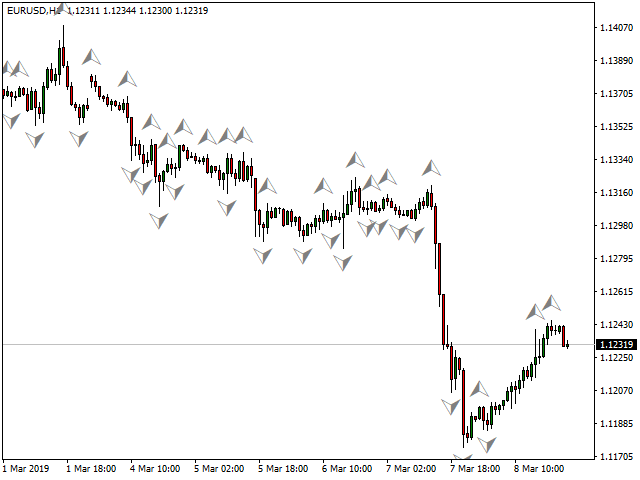

Fractals with Alert for MT4 and MT5

A New Bridge Between the Geometry of Fractals and the Dynamics of Partial Synchronization

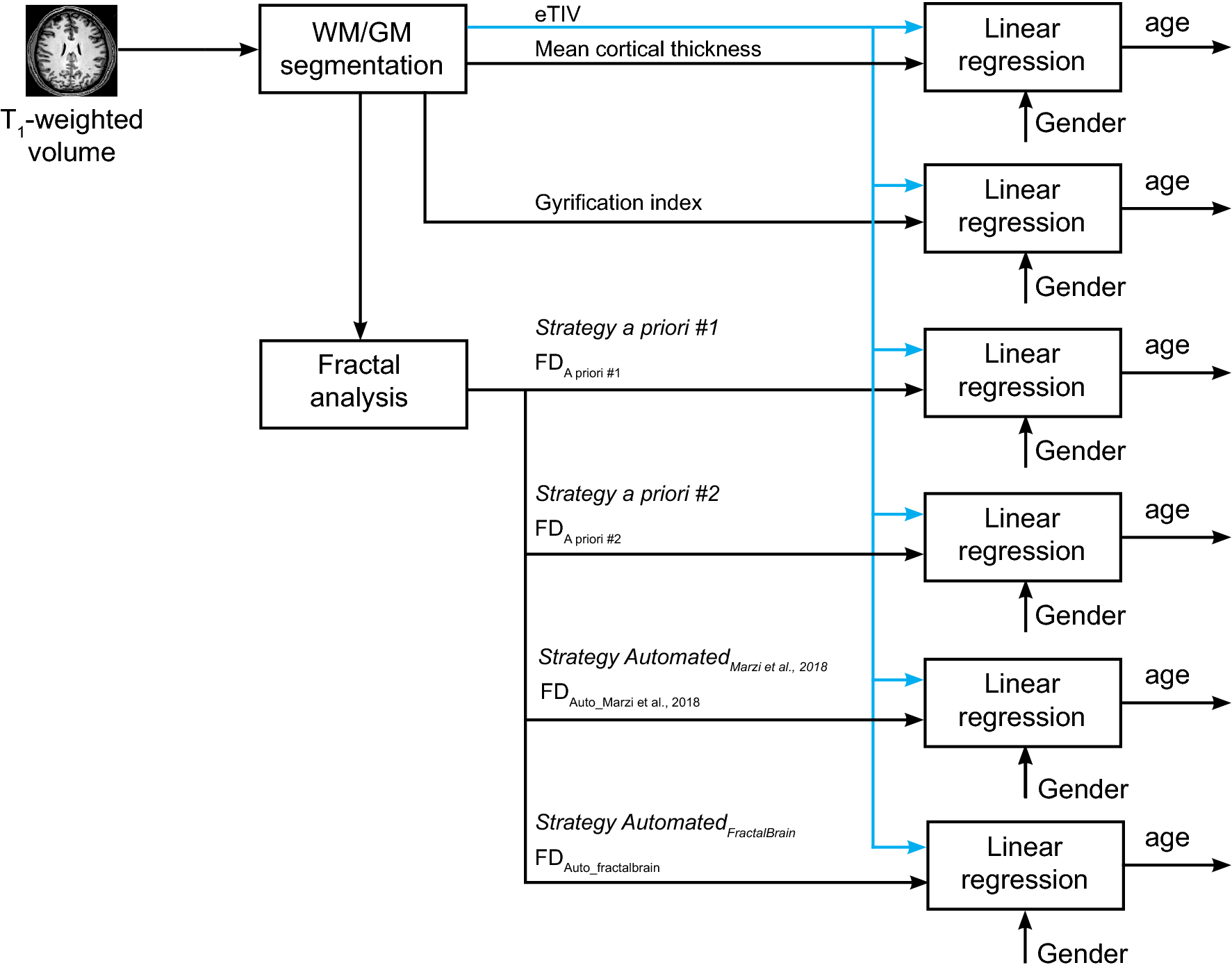

Toward a more reliable characterization of fractal properties of the cerebral cortex of healthy subjects during the lifespan

Rybka 1.0 Get File - Colaboratory


Fractal Indicator: Definition, What It Signals, and How To Trade

Experimental investigation on coal pore and fracture characteristics based on fractal theory - ScienceDirect

Fractal Pictures [HQ] Download Free Images on Unsplash

The Pattern Inside the Pattern: Fractals, the Hidden Order Beneath Chaos, and the Story of the Refugee Who Revolutionized the Mathematics of Reality – The Marginalian

The Fractal Geometry of Nature: Benoit B. Mandelbrot: 9780716711865: : Books

Fractal Cutout Card – Fractal Foundation


Recomendado para você

-

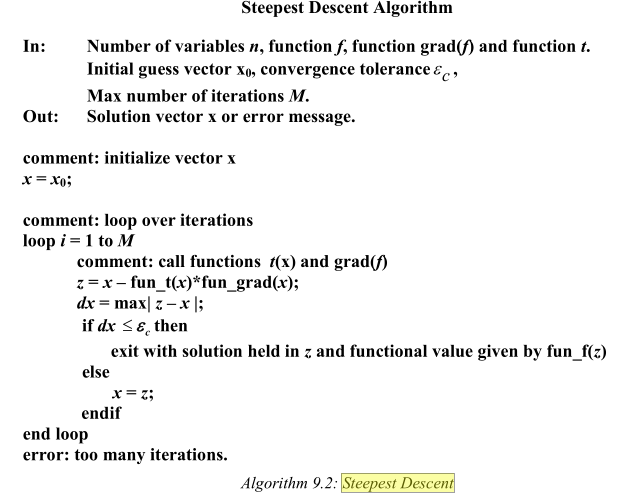

Steepest Descent Method15 abril 2025

Steepest Descent Method15 abril 2025 -

Steepest Descent Method15 abril 2025

Steepest Descent Method15 abril 2025 -

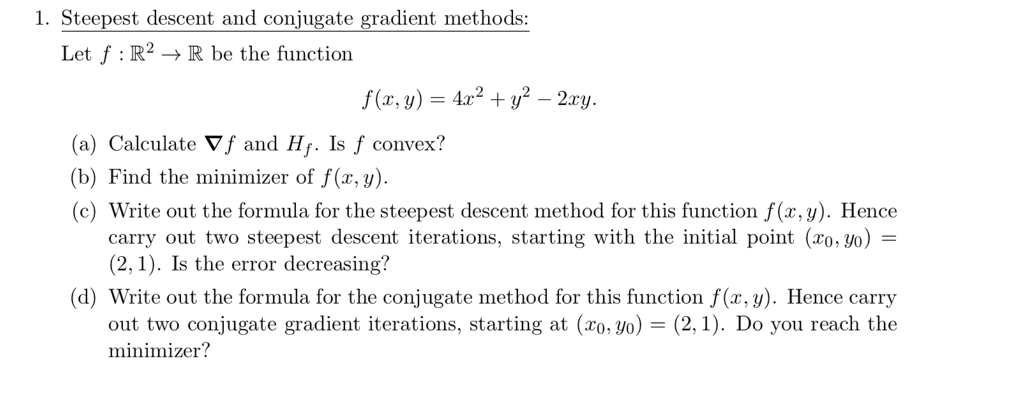

Solved 1. Steepest descent and conjugate gradient methods15 abril 2025

Solved 1. Steepest descent and conjugate gradient methods15 abril 2025 -

Write a MATLAB program for the steepest descent15 abril 2025

Write a MATLAB program for the steepest descent15 abril 2025 -

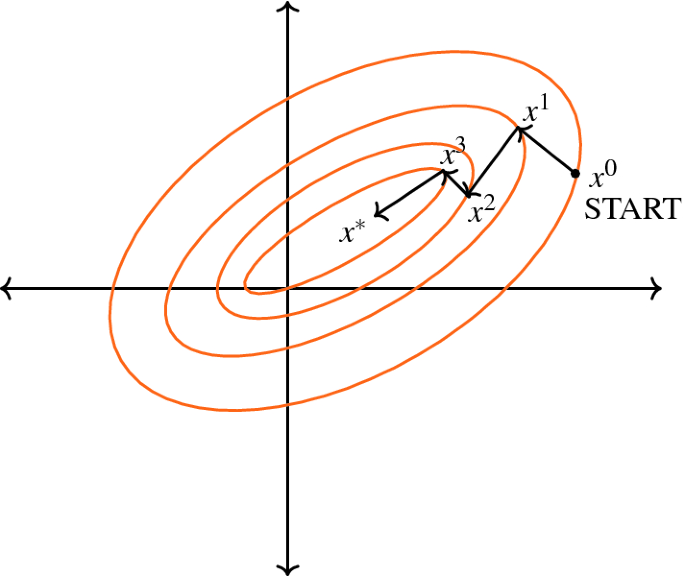

MathType - The #Gradient descent is an iterative optimization #algorithm for finding local minimums of multivariate functions. At each step, the algorithm moves in the inverse direction of the gradient, consequently reducing15 abril 2025

-

Gradient Descent - Gradient descent - Product Manager's Artificial Intelligence Learning Library15 abril 2025

Gradient Descent - Gradient descent - Product Manager's Artificial Intelligence Learning Library15 abril 2025 -

Gradient Descent Big Data Mining & Machine Learning15 abril 2025

Gradient Descent Big Data Mining & Machine Learning15 abril 2025 -

Steepest Descent - an overview15 abril 2025

Steepest Descent - an overview15 abril 2025 -

![PDF] Steepest Descent and Conjugate Gradient Methods with Variable Preconditioning](https://d3i71xaburhd42.cloudfront.net/a0174a41c7d682aeb1d7e7fa1fbd2404e037a638/11-Figure8.1-1.png) PDF] Steepest Descent and Conjugate Gradient Methods with Variable Preconditioning15 abril 2025

PDF] Steepest Descent and Conjugate Gradient Methods with Variable Preconditioning15 abril 2025 -

Solved] . 1. Solve the following using steepest descent algorithm. Start15 abril 2025
