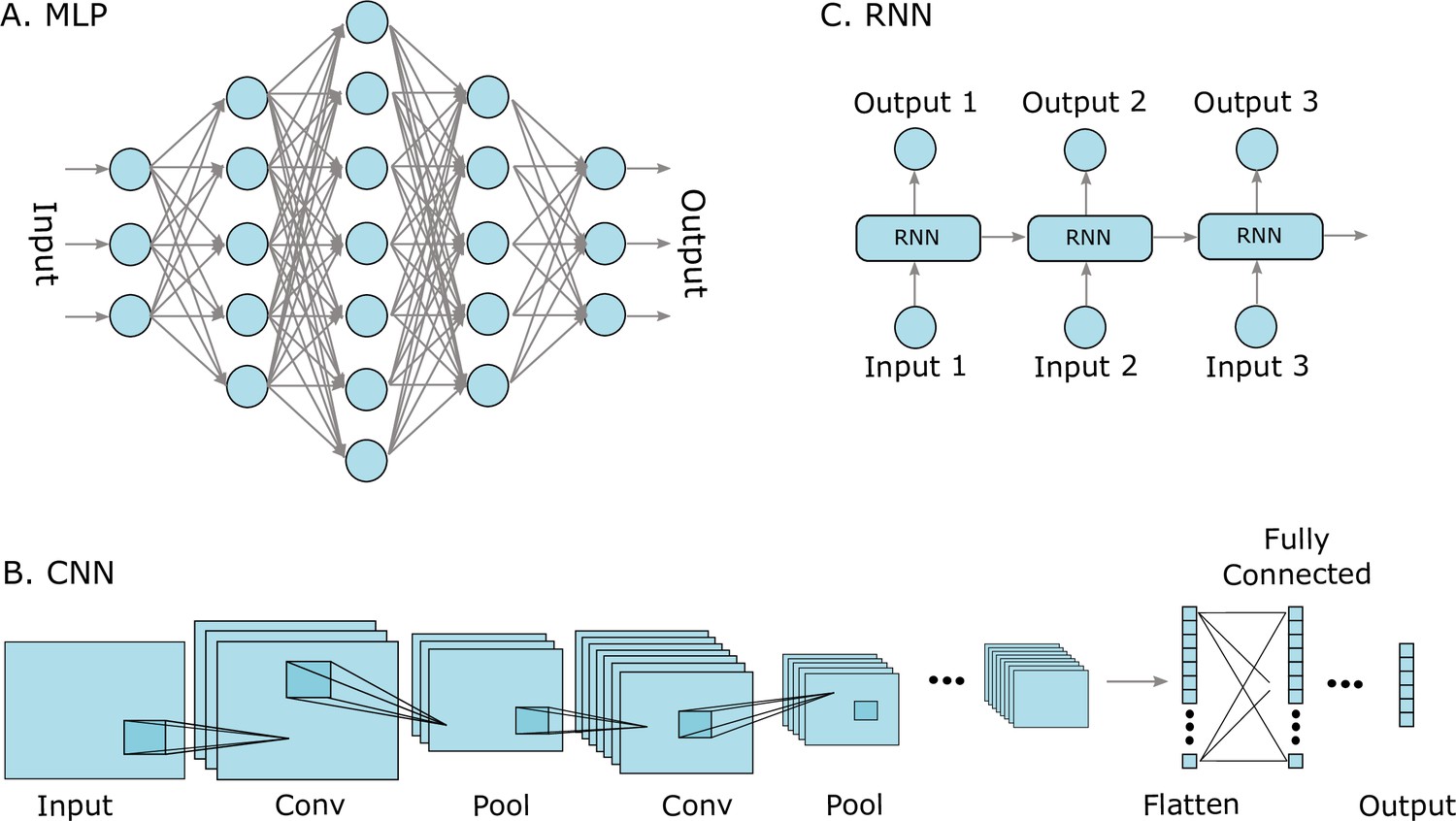

(PDF) Incorporating representation learning and multihead attention

By a mysterious writer

Last updated 15 April 2025

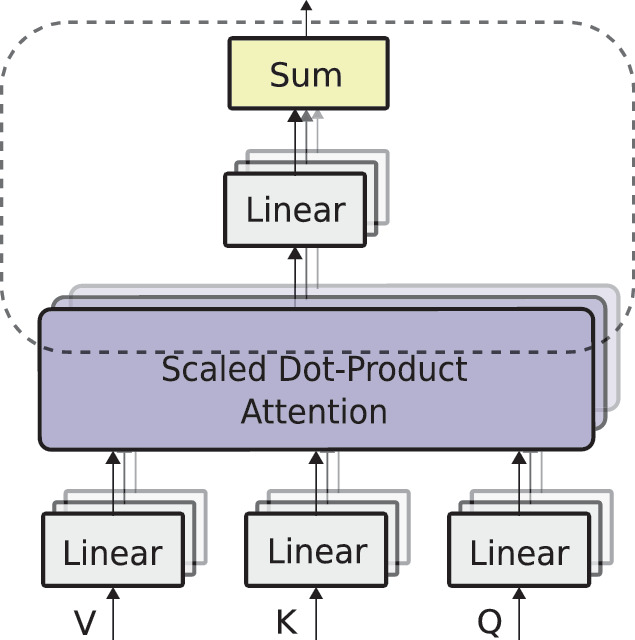

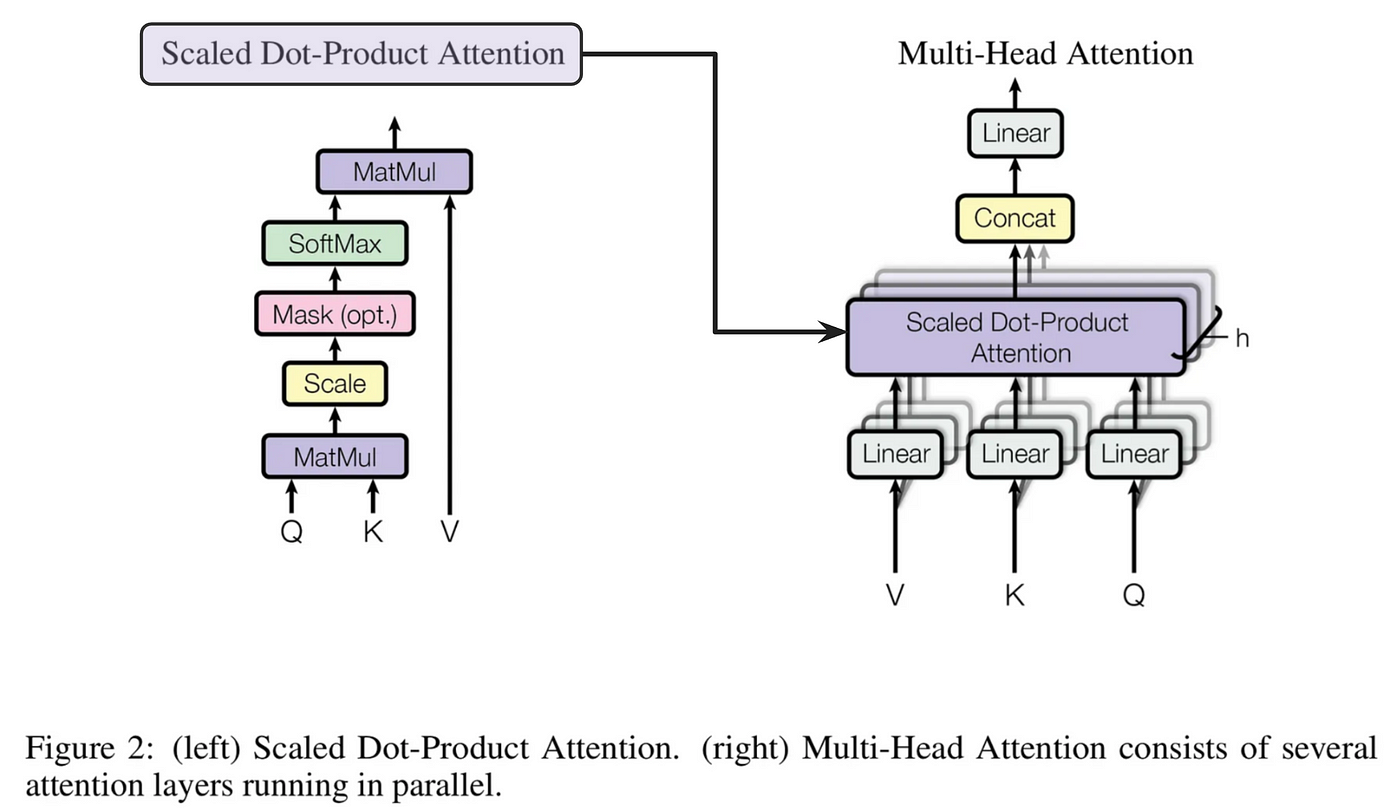

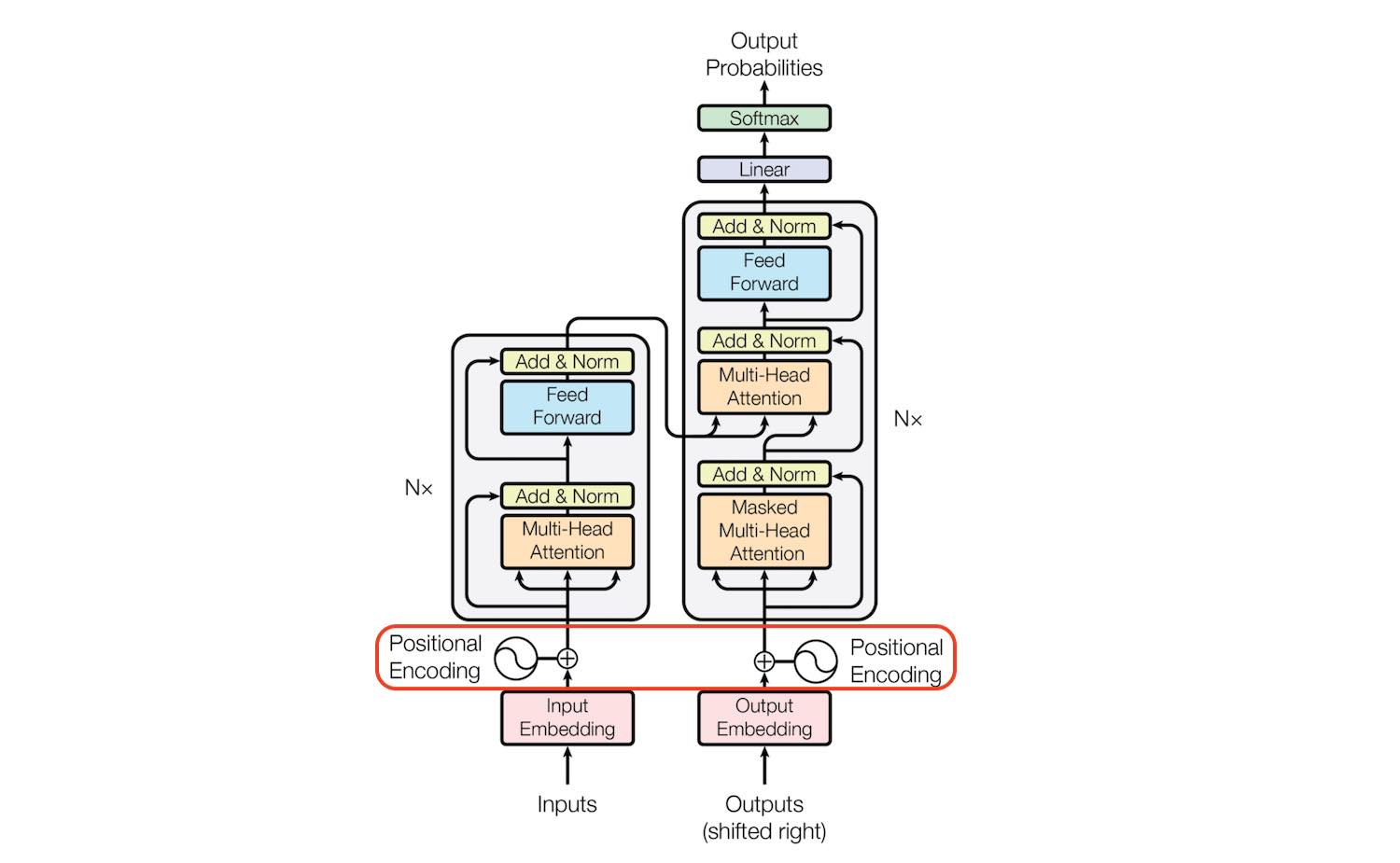

Software and Hardware Fusion Multi-Head Attention

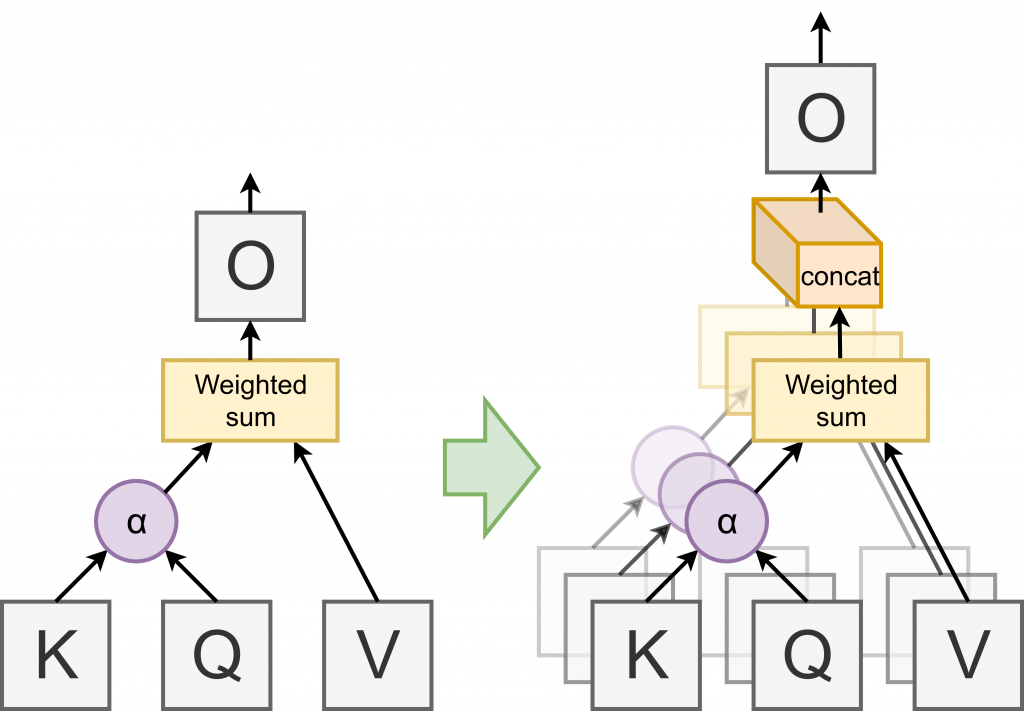

Intuition for Multi-headed Attention, by Ngieng Kianyew

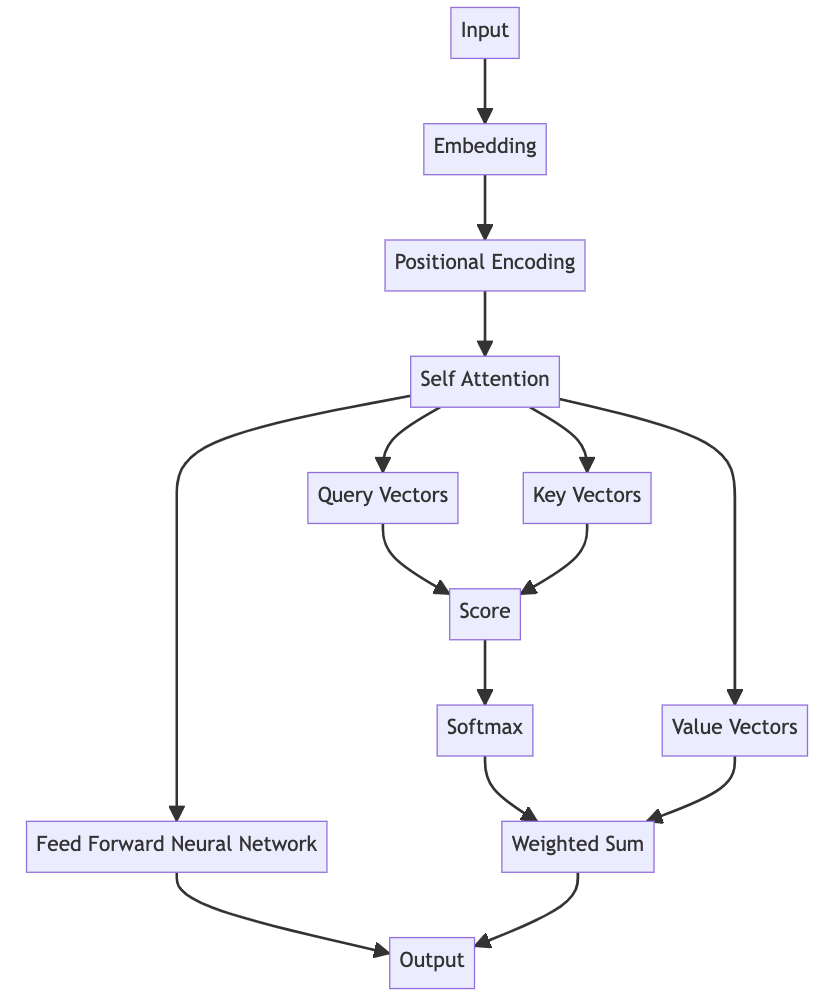

Decoding the Magic of Self-Attention: A Deep Dive into its Intuition and Mechanisms, by Farzad Karami

Transformer Architecture: The Positional Encoding - Amirhossein Kazemnejad's Blog

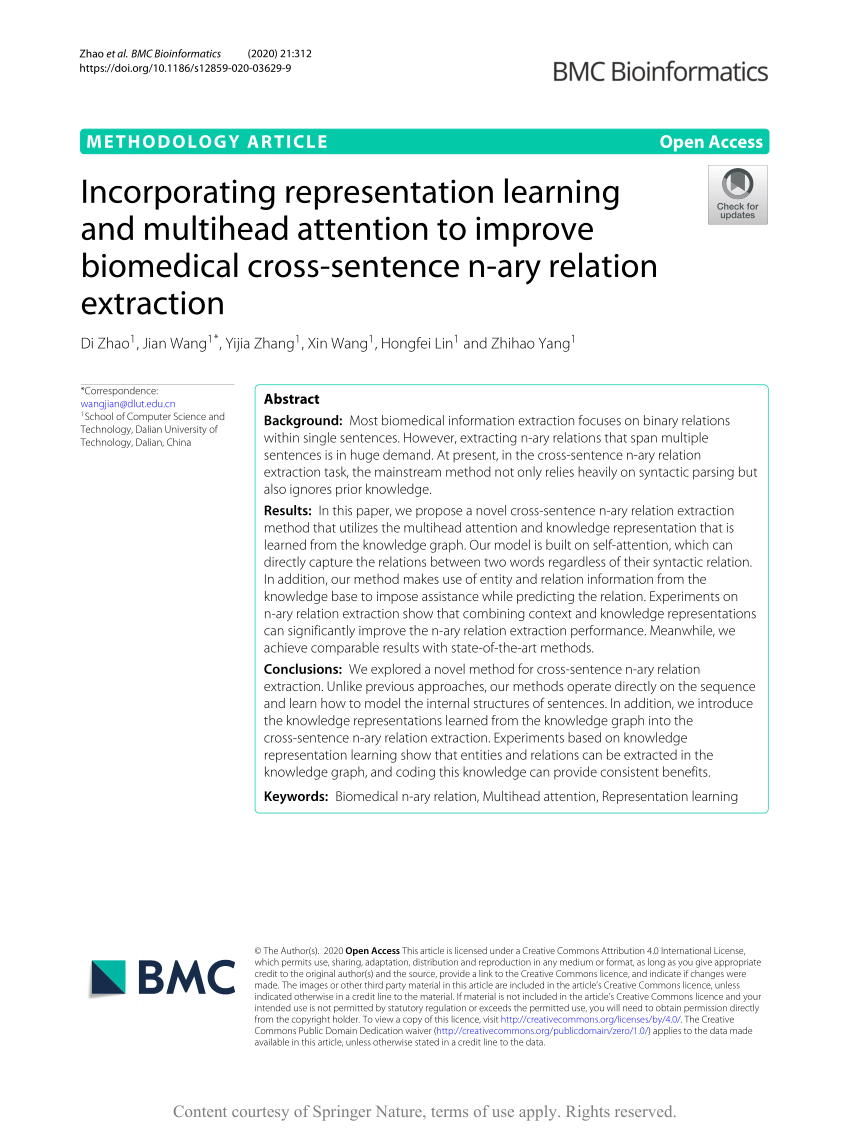

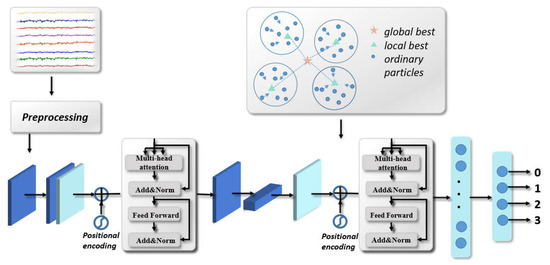

Incorporating representation learning and multihead attention to improve biomedical cross-sentence n-ary relation extraction, BMC Bioinformatics

How to Implement Multi-Head Attention from Scratch in TensorFlow and Keras

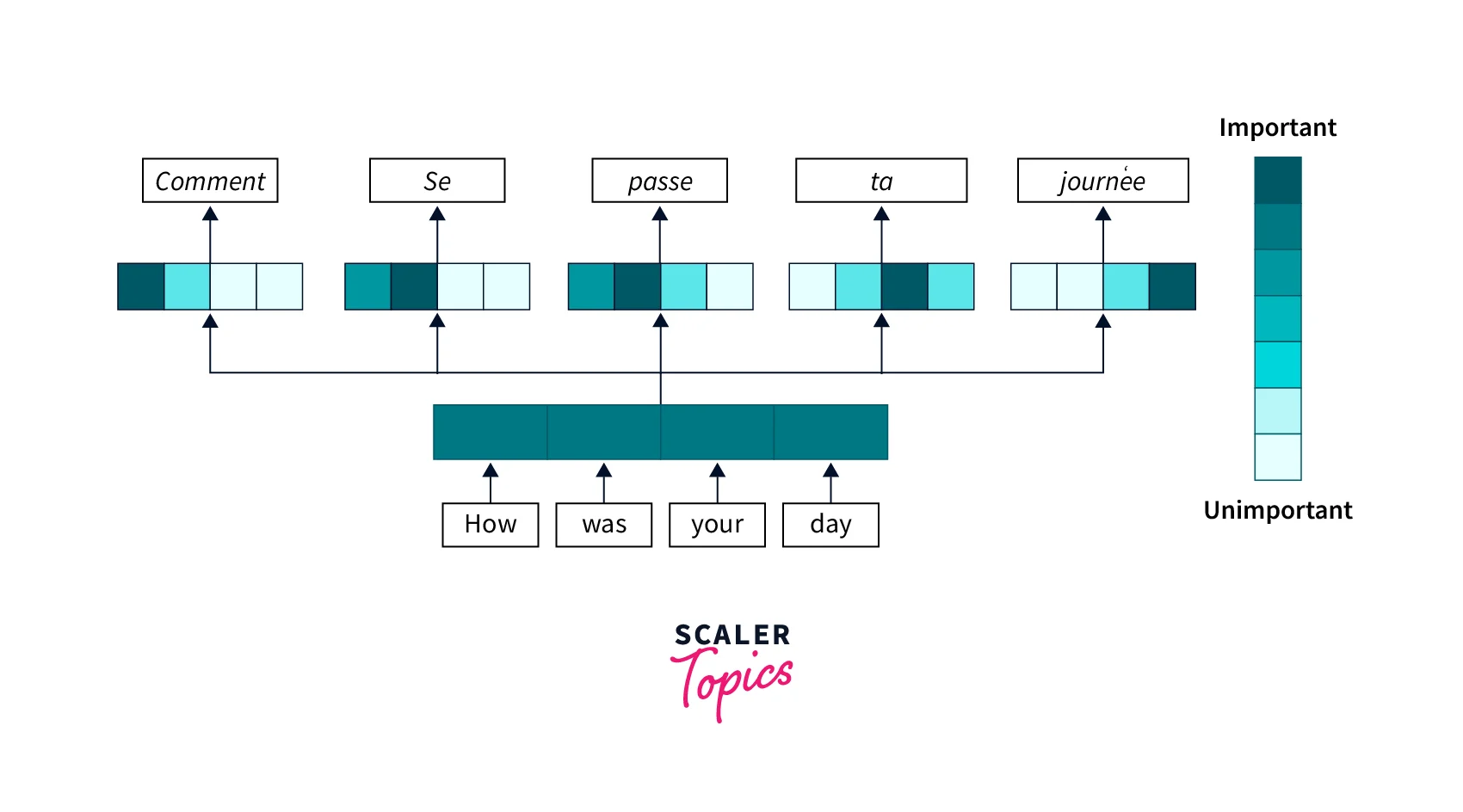

Attention Mechanism in Deep Learning - Scaler Topics

Tutorial 6: Transformers and Multi-Head Attention — UvA DL Notebooks v1.2 documentation

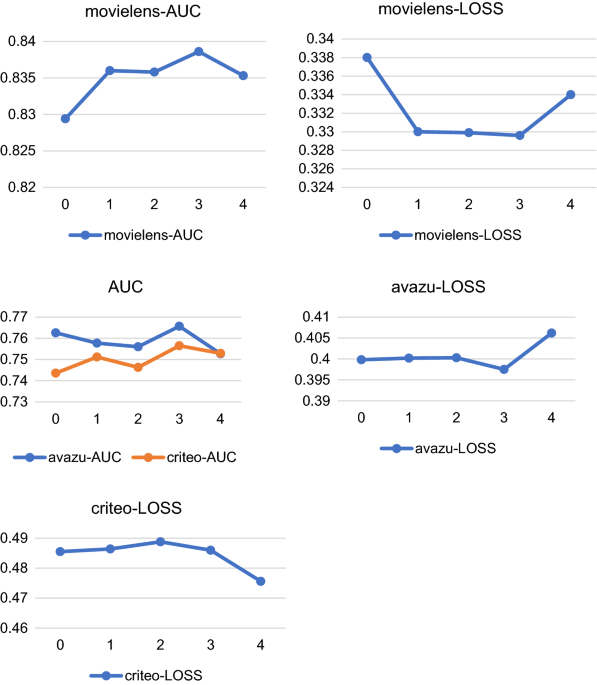

Click-through rate prediction model integrating user interest and multi-head attention mechanism, Journal of Big Data

Transformer-based deep learning for predicting protein properties in the life sciences

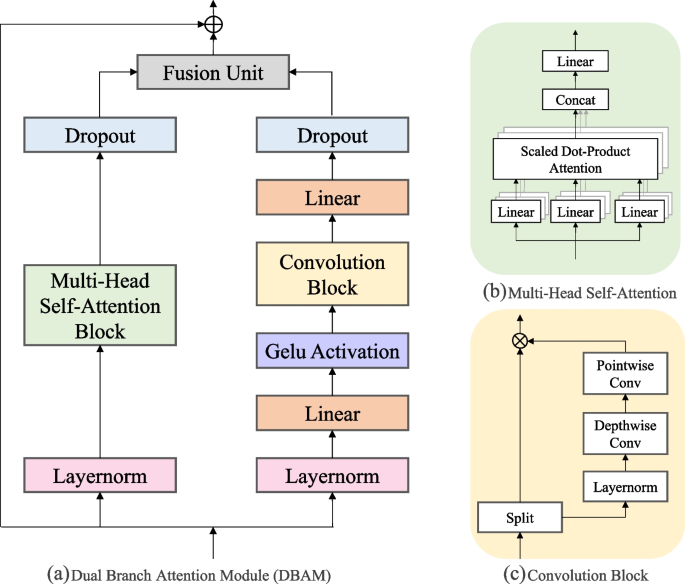

Dual-branch attention module-based network with parameter sharing for joint sound event detection and localization, EURASIP Journal on Audio, Speech, and Music Processing

Bioengineering, Free Full-Text

Are Sixteen Heads Really Better than One? – Machine Learning Blog, ML@CMU

Recomendado para você

-

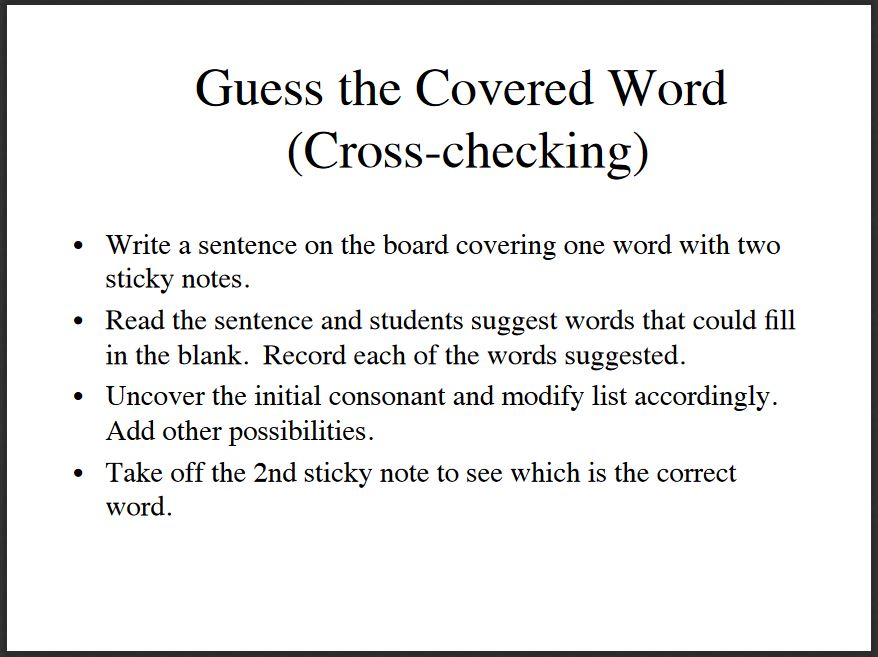

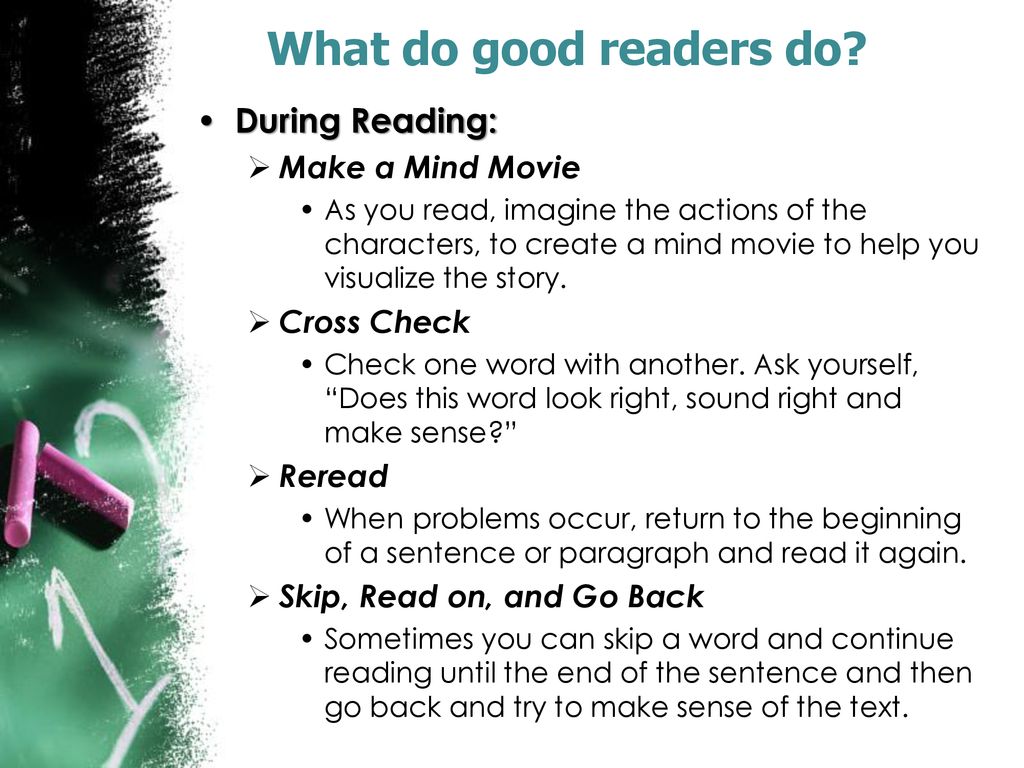

Cross Checking: What it is and why your kids need it15 abril 2025

Cross Checking: What it is and why your kids need it15 abril 2025 -

Word identification and decoding15 abril 2025

Word identification and decoding15 abril 2025 -

15 Minute Comprehension Activities - ppt download15 abril 2025

15 Minute Comprehension Activities - ppt download15 abril 2025 -

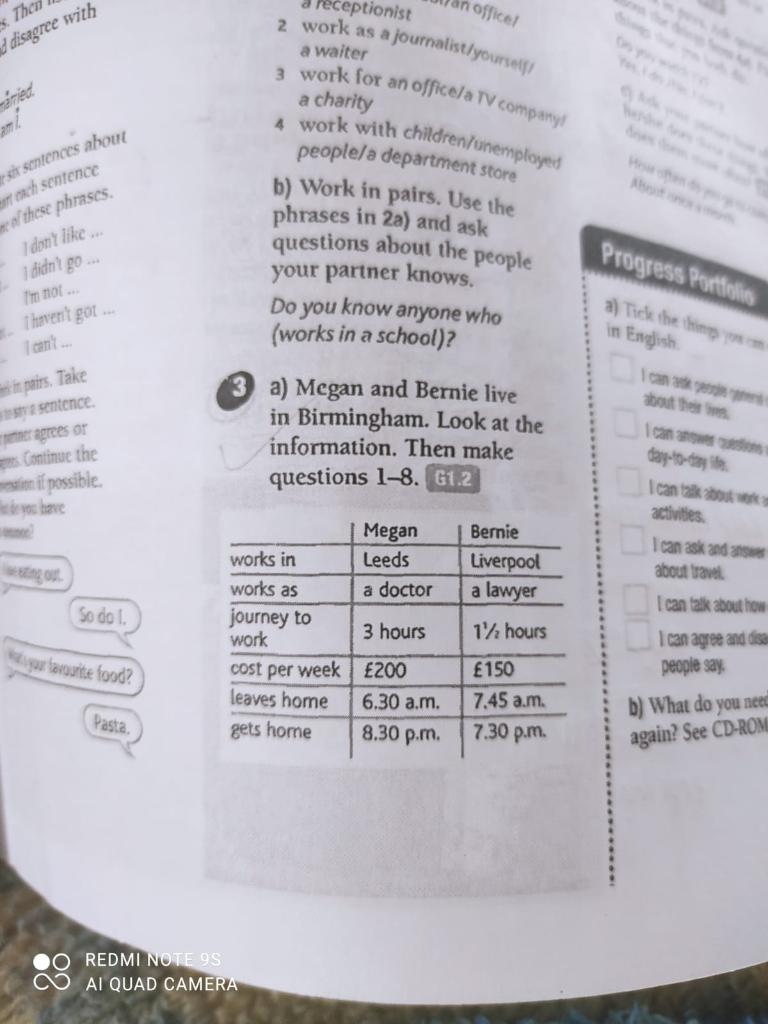

Solved ask and And a 6 2 a) Cross check uk 11.12 Listen and15 abril 2025

-

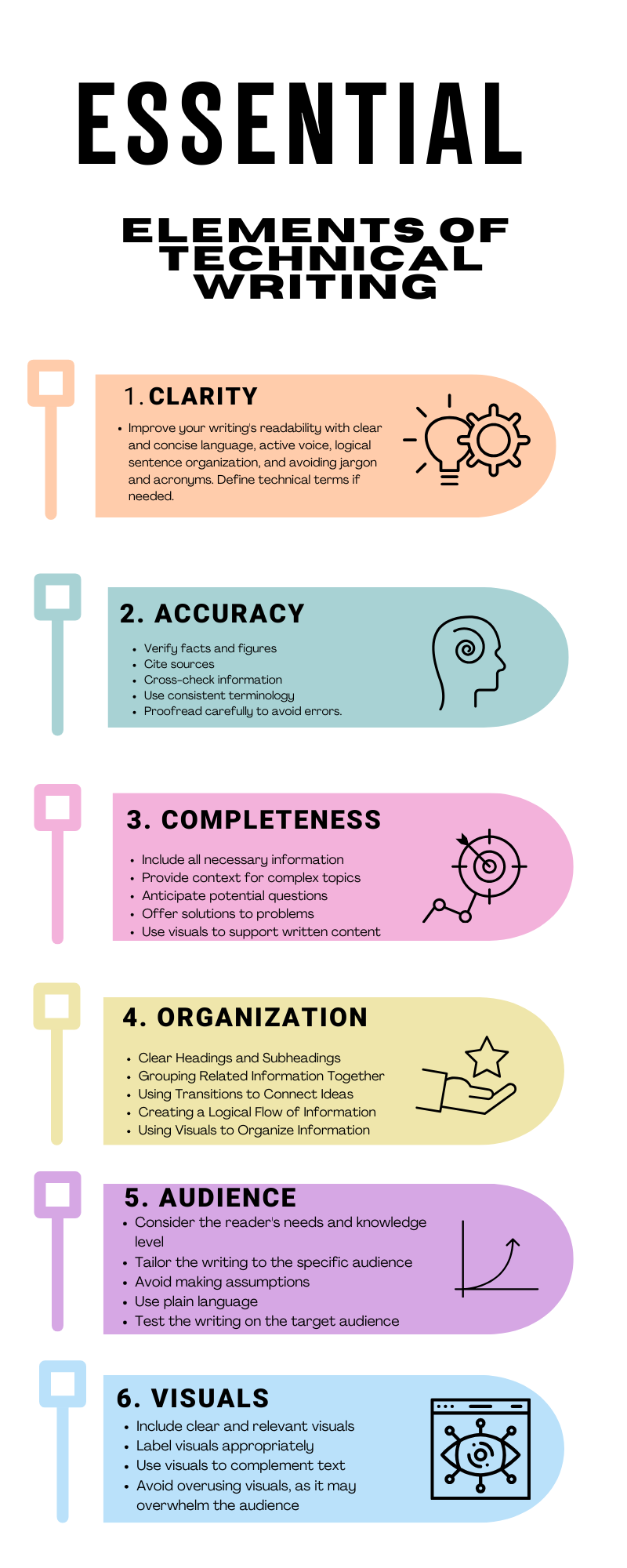

Essential Elements of Technical Writing: A Guide for Technical15 abril 2025

Essential Elements of Technical Writing: A Guide for Technical15 abril 2025 -

please check the answer it is correct • Tick V) the groups of15 abril 2025

please check the answer it is correct • Tick V) the groups of15 abril 2025 -

Total number of sentences and number of check-worthy ones in the15 abril 2025

Total number of sentences and number of check-worthy ones in the15 abril 2025 -

10 Tautology Examples (2023)15 abril 2025

10 Tautology Examples (2023)15 abril 2025 -

Code Switching: Definition, Types and Examples (2023)15 abril 2025

Code Switching: Definition, Types and Examples (2023)15 abril 2025 -

Metacommentary: Definition and Examples (2023)15 abril 2025

Metacommentary: Definition and Examples (2023)15 abril 2025
