Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave (Part 1)

By a mysterious writer

Last updated April 25, 2025

Efficiently Scale LLM Training Across a Large GPU Cluster with Alpa and Ray

Breaking MLPerf Training Records with NVIDIA H100 GPUs

News Posts matching 'NVIDIA H100'

Nvidia sweeps AI benchmarks, but Intel brings meaningful competition

Nvidia has gone mad! Invest in three generative AI unicorns in a row, plus 5nm production capacity with TSMC

NVIDIA H100 Tensor Core GPU Dominates MLPerf v3.0 Benchmark Results

The Story Behind CoreWeave's Rumored Rise to a $5-$8B Valuation, Up From $2B in April

Hagay Lupesko on LinkedIn: Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave…

Beating SOTA Inference Performance on NVIDIA GPUs with GPUNet

AMD Has a GPU to Rival Nvidia's H100

NVIDIA's H100 GPUs & The AI Frenzy; a Rundown of Current Situation

Intel and Nvidia Square Off in GPT-3 Time Trials - IEEE Spectrum

Recommended for you

Nvidia GeForce vs AMD Radeon GPUs in 2023 (Benchmarks & Comparison)

No Virtualization Tax for MLPerf Inference v3.0 Using NVIDIA Hopper and Ampere vGPUs and NVIDIA AI Software with vSphere 8.0.1 - VROOM! Performance Blog

Training LLMs with AMD MI250 GPUs and MosaicML

Best GPUs in 2023: Our top graphics card picks

AMD vs Nvidia in 2023: who is the graphics card champion?

2nd-gen 2023 Fire TV Stick 4K & 4K Max Benchmarks — Compared to all Fire TVs and Google/Android TV Devices

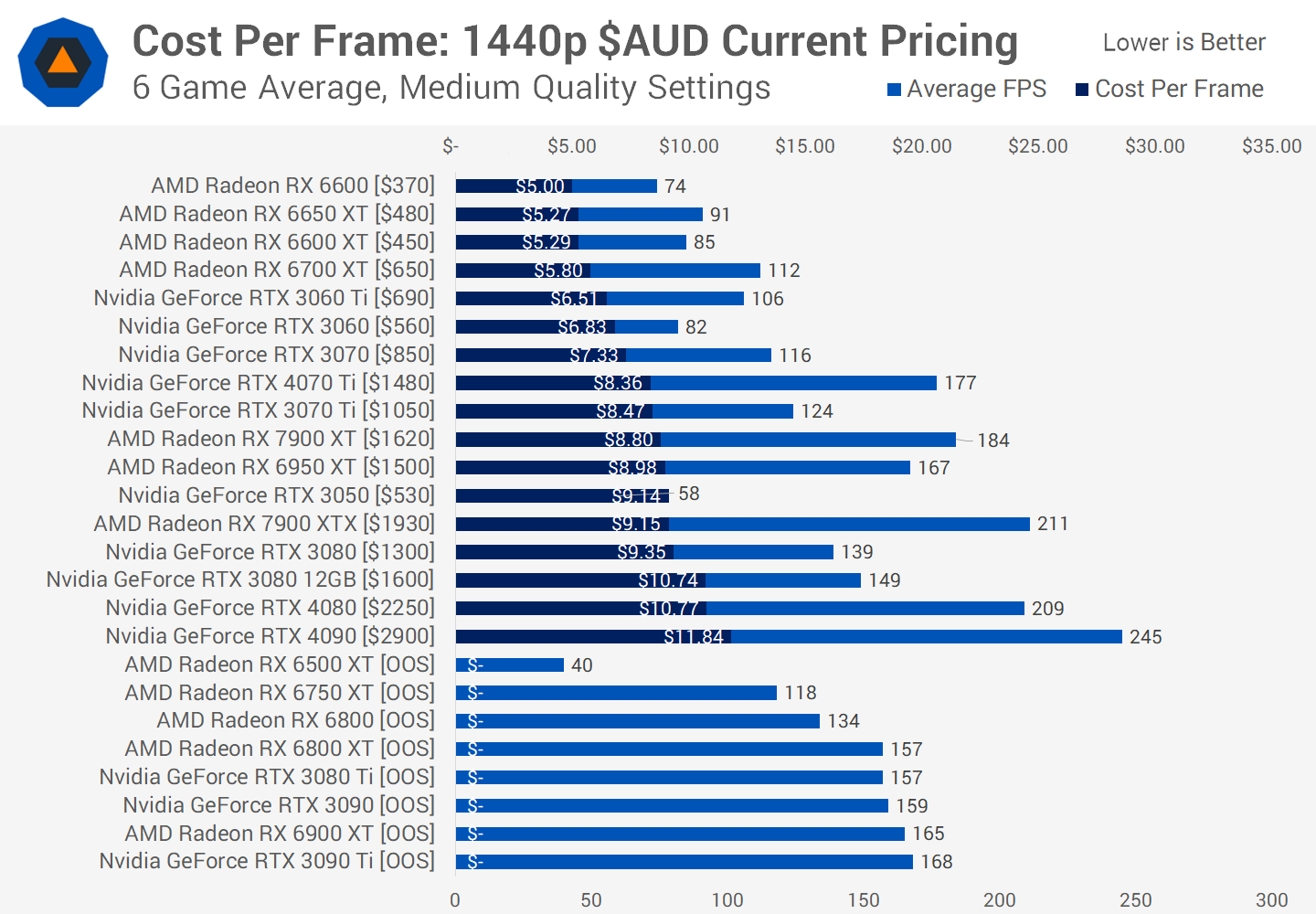

Cost Per Frame: Best Value Graphics Cards in Early 2023

Best Graphics Cards for 4K Gaming in 2023 - GeekaWhat

Best Graphics Cards 2023 - Top Gaming GPUs for the Money

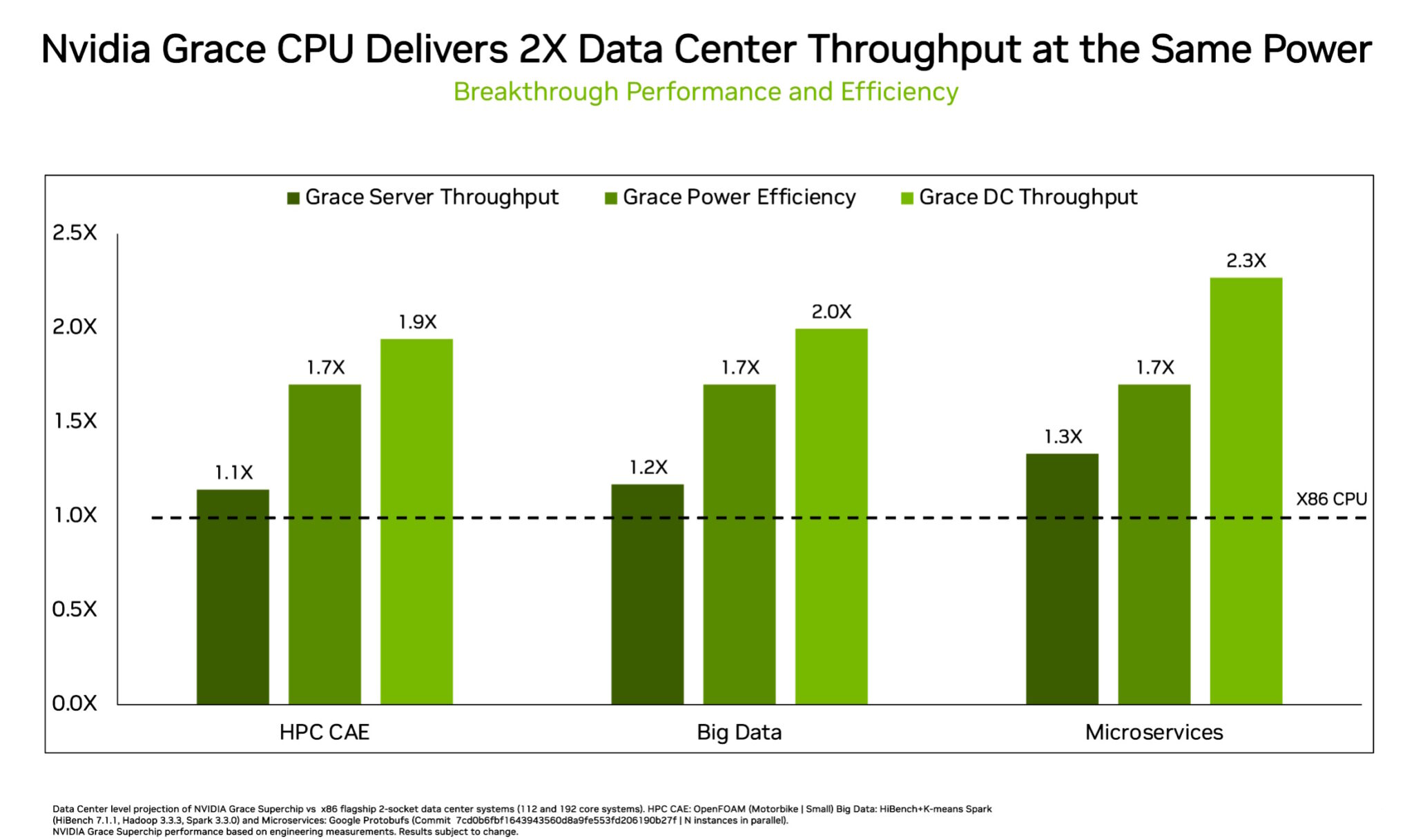

Grace CPU Brings Energy Efficiency to Data Centers
