Policy or Value? Loss Function and Playing Strength in AlphaZero-like Self-play

By a mysterious writer

Last updated 16 April 2025

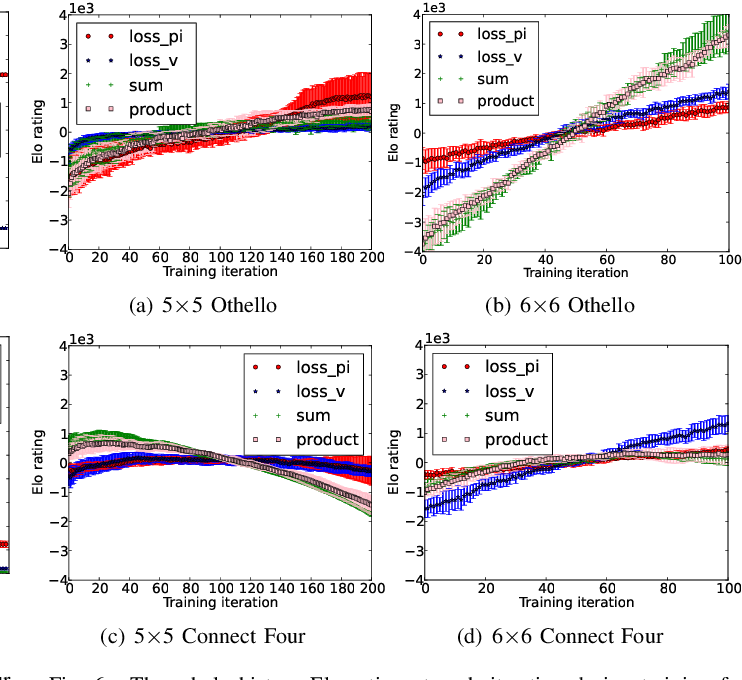

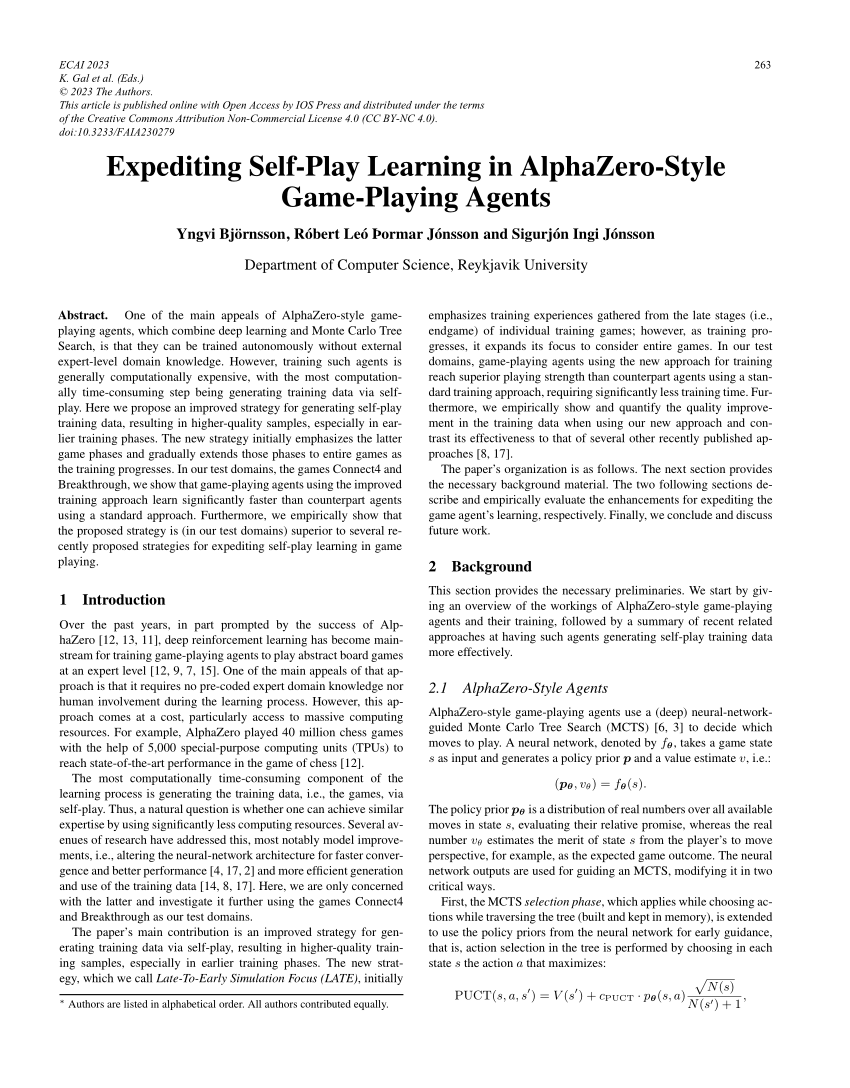

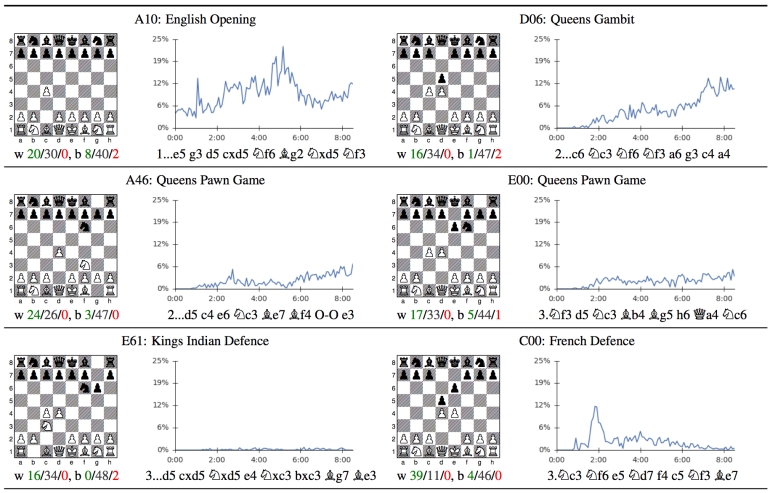

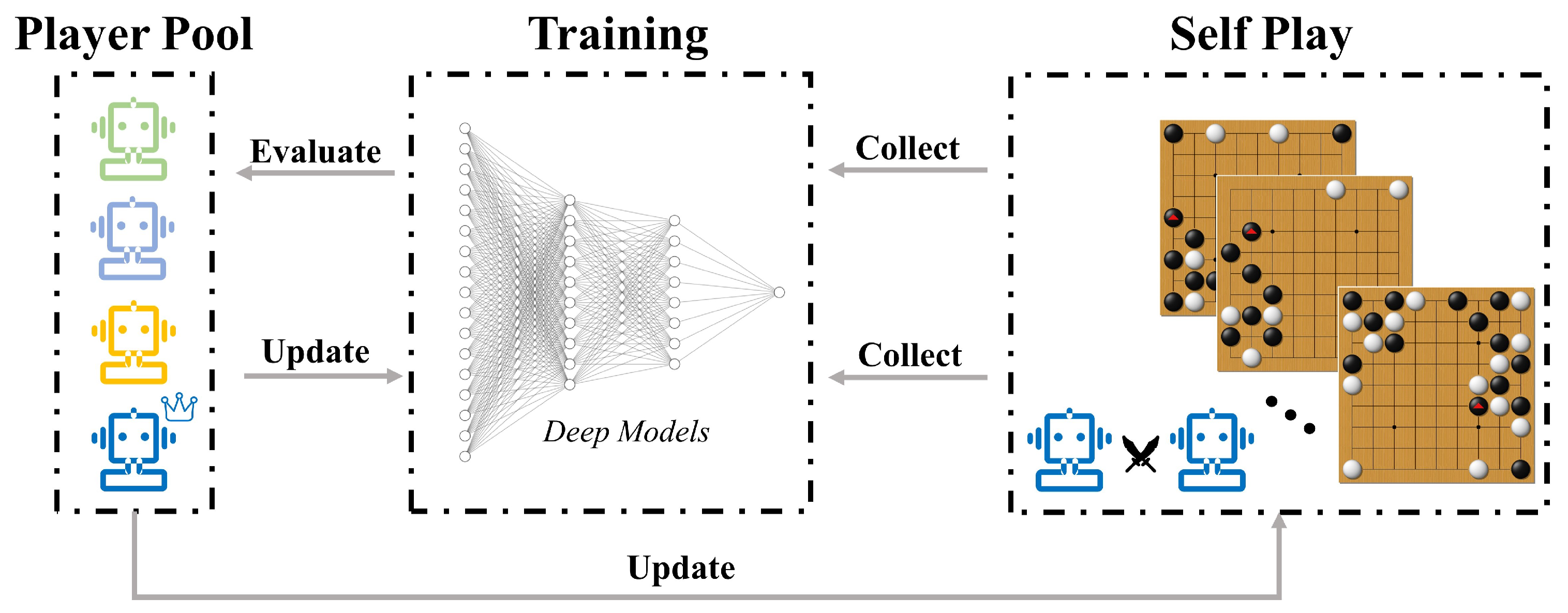

Recently, AlphaZero has achieved outstanding performance in playing Go, Chess, and Shogi. Players in AlphaZero consist of a combination of Monte Carlo Tree Search and a Deep Q-network that is trained using self-play. The unified Deep Q-network has a policy head and a value head. During AlphaZero training, the optimization minimizes the sum of the policy loss and the value loss. However, it is not clear whether, and under which circumstances, other formulations of the objective function are better. Therefore, in this paper, we perform experiments with combinations of these two optimization targets. Because self-play is computationally intensive, we use small games, which allows us to run multiple test cases. We use a lightweight open-source reimplementation of AlphaZero on two different games. We investigate optimizing the two targets independently, and also try different combinations (sum and product). Our results indicate that, at least for relatively simple games such as 6x6 Othello and Connect Four, optimizing the sum, as AlphaZero does, performs consistently worse than the other objectives, and in particular worse than optimizing only the value loss. Moreover, we find that care must be taken in computing the playing strength. Tournament Elo ratings differ from training Elo ratings: training Elo ratings, though cheap to compute and frequently reported, can be misleading and may lead to bias. It is currently not clear how these results transfer to more complex games, and whether there is a phase transition between our setting and the AlphaZero application to Go, where the sum is seemingly the better choice.
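To make the four objectives concrete, here is a minimal sketch for a single training position. It assumes the standard AlphaZero loss terms: squared error between the game outcome `z` and the value-head output `v`, and cross-entropy between the MCTS visit distribution `pi` and the policy-head probabilities `p`, with L2 regularization omitted. All function names (`value_loss`, `policy_loss`, `objective`, `elo_expected`, `elo_update`) are hypothetical, and this is not the authors' code. The Elo helpers show only the textbook expected-score and update rule, as a reminder of how a rating is derived from game results; the paper's exact rating procedure and K-factor may differ.

```python
import math
from typing import Sequence, Tuple

# Sketch only: names are hypothetical, not taken from the paper's implementation.


def value_loss(z: float, v: float) -> float:
    """Squared error between the game outcome z and the value-head output v."""
    return (z - v) ** 2


def policy_loss(pi: Sequence[float], p: Sequence[float], eps: float = 1e-12) -> float:
    """Cross-entropy -sum(pi * log p) between MCTS visit probabilities pi
    and policy-head probabilities p (eps guards against log(0))."""
    return -sum(t * math.log(max(q, eps)) for t, q in zip(pi, p))


def objective(kind: str, z: float, v: float,
              pi: Sequence[float], p: Sequence[float]) -> float:
    """Per-position objective under the four variants compared in the paper."""
    lv = value_loss(z, v)
    lp = policy_loss(pi, p)
    if kind == "policy":
        return lp
    if kind == "value":
        return lv
    if kind == "sum":  # AlphaZero's choice (L2 regularization omitted here)
        return lp + lv
    if kind == "product":
        return lp * lv
    raise ValueError(f"unknown objective: {kind!r}")


def elo_expected(r_a: float, r_b: float) -> float:
    """Expected score of player A against player B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_update(r_a: float, r_b: float, score_a: float,
               k: float = 32.0) -> Tuple[float, float]:
    """Updated (r_a, r_b) after one game; score_a is 1, 0.5, or 0. k is assumed."""
    e_a = elo_expected(r_a, r_b)
    delta = k * (score_a - e_a)
    return r_a + delta, r_b - delta


if __name__ == "__main__":
    # A won game (z = 1), value estimate 0.5, uniform policy over two moves.
    for kind in ("policy", "value", "sum", "product"):
        print(kind, round(objective(kind, 1.0, 0.5, [0.5, 0.5], [0.5, 0.5]), 4))
    # prints: policy 0.6931 / value 0.25 / sum 0.9431 / product 0.1733
```

One design consequence worth noting: under the product, the gradient of the policy loss is scaled by the current value loss and vice versa, so the two heads' training signals are coupled rather than simply added.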

você pode gostar

-

Desenho De Procure Por Um Jogo Figuras Desenhos Animados Idênticos Em Uma Página Livro Para Colorir Vetor PNG , Desenho De Carro, Desenho De Desenho Animado, Desenho De Livro Imagem PNG e16 abril 2025

Desenho De Procure Por Um Jogo Figuras Desenhos Animados Idênticos Em Uma Página Livro Para Colorir Vetor PNG , Desenho De Carro, Desenho De Desenho Animado, Desenho De Livro Imagem PNG e16 abril 2025 -

DREAM WORLD by: Mustafa Avşaroğlu16 abril 2025

DREAM WORLD by: Mustafa Avşaroğlu16 abril 2025 -

500 Hillock Stock Pictures, Editorial Images and Stock Photos16 abril 2025

500 Hillock Stock Pictures, Editorial Images and Stock Photos16 abril 2025 -

Cookie Clicker - Como Instalar Mods na Steam (Tutorial)(BÔNUS)16 abril 2025

Cookie Clicker - Como Instalar Mods na Steam (Tutorial)(BÔNUS)16 abril 2025 -

Car Driving Game on Google Maps16 abril 2025

Car Driving Game on Google Maps16 abril 2025 -

Nova parceria! Thor e Valkyrie serão as estrelas de reboot de16 abril 2025

Nova parceria! Thor e Valkyrie serão as estrelas de reboot de16 abril 2025 -

Bomberman Online (Remastered) by MTYMAC on DeviantArt16 abril 2025

Bomberman Online (Remastered) by MTYMAC on DeviantArt16 abril 2025 -

Classroom of the Elite 2nd Season16 abril 2025

Classroom of the Elite 2nd Season16 abril 2025 -

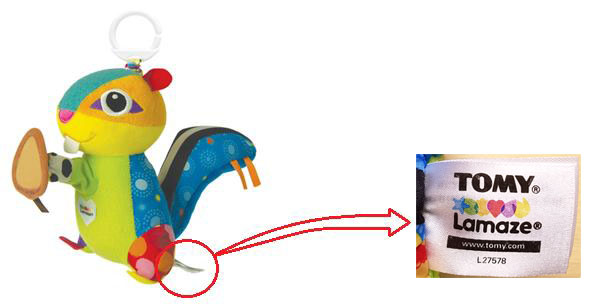

TOMY Recalls Munching Max Chipmunk Toys Due to Laceration Hazard16 abril 2025

TOMY Recalls Munching Max Chipmunk Toys Due to Laceration Hazard16 abril 2025 -

Carros Rebaixados Online - CRO APK for Android Download16 abril 2025

Carros Rebaixados Online - CRO APK for Android Download16 abril 2025