optimization - How to show that the method of steepest descent does not converge in a finite number of steps? - Mathematics Stack Exchange

By a mysterious writer

Last updated 11 April 2025

I have a function,

$$f(\mathbf{x})=x_1^2+4x_2^2-4x_1-8x_2,$$

which can also be expressed as

$$f(\mathbf{x})=(x_1-2)^2+4(x_2-1)^2-8.$$
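Below is a sketch of one standard argument. It is not part of the original question, and it assumes exact line search, since the question does not name a step-size rule. Write $A$ for the Hessian, read $\mathbf{x}^*=(2,1)$ off the completed-square form, and let $\mathbf{x}_0=(x_1^{(0)},x_2^{(0)})$. The gradient and Hessian are

$$\nabla f(\mathbf{x})=\begin{pmatrix}2x_1-4\\ 8x_2-8\end{pmatrix}=A(\mathbf{x}-\mathbf{x}^*),\qquad A=\nabla^2 f=\begin{pmatrix}2&0\\0&8\end{pmatrix}.$$

With $\mathbf{g}_k=\nabla f(\mathbf{x}_k)$, exact line search along $-\mathbf{g}_k$ gives

$$\alpha_k=\frac{\mathbf{g}_k^\top\mathbf{g}_k}{\mathbf{g}_k^\top A\,\mathbf{g}_k},\qquad \mathbf{x}_{k+1}=\mathbf{x}_k-\alpha_k\mathbf{g}_k,\qquad \mathbf{g}_{k+1}=\mathbf{g}_k-\alpha_k A\,\mathbf{g}_k,$$

so $\mathbf{g}_{k+1}^\top\mathbf{g}_k=0$. Moreover, $\mathbf{g}_{k+1}=\mathbf{0}$ exactly when $A\mathbf{g}_k=\alpha_k^{-1}\mathbf{g}_k$, that is, when $\mathbf{g}_k$ is an eigenvector of $A$. For $A=\operatorname{diag}(2,8)$ the eigenvectors are the nonzero multiples of $(1,0)$ and $(0,1)$. The method therefore stops at step $k+1$ only if one component of $\mathbf{g}_k$ is zero.

Now suppose both components of $\mathbf{g}_k$ are nonzero. Then $\mathbf{g}_{k+1}\neq\mathbf{0}$. Because $\mathbf{g}_{k+1}$ is orthogonal to $\mathbf{g}_k$ in $\mathbb{R}^2$,

$$\mathbf{g}_{k+1}=t\begin{pmatrix}-(\mathbf{g}_k)_2\\ (\mathbf{g}_k)_1\end{pmatrix},\qquad t\neq 0,$$

which again has both components nonzero. By induction, any start with $x_1^{(0)}\neq 2$ and $x_2^{(0)}\neq 1$ never reaches $\mathbf{x}^*$ in finitely many steps. A start on one of the lines $x_1=2$ or $x_2=1$ (other than $\mathbf{x}^*$ itself) reaches it in exactly one step.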

I've deduced the minimizer $\mathbf{x}^*$ as $(2,1)$, with $f^* = f(\mathbf{x}^*) = -8$.
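The following minimal Python sketch shows the zigzag concretely, again assuming exact line search. It uses `fractions.Fraction`, so the arithmetic is exact and "reaching $\mathbf{x}^*$" means hitting $(2,1)$ exactly rather than up to rounding. The names `A_DIAG`, `X_STAR`, `grad` and `steepest_descent_exact` are illustrative and do not come from the question. A finite run is only an illustration, not a proof.

```python
from fractions import Fraction

# Hessian of f(x) = x1^2 + 4 x2^2 - 4 x1 - 8 x2 is diag(2, 8); store its diagonal.
A_DIAG = (Fraction(2), Fraction(8))
X_STAR = (Fraction(2), Fraction(1))  # minimizer read off the completed square


def grad(x):
    """Gradient of f: (2 x1 - 4, 8 x2 - 8), which equals A (x - x*)."""
    return (2 * x[0] - 4, 8 * x[1] - 8)


def steepest_descent_exact(x0, max_iter=20):
    """Steepest descent with exact line search, in exact rational arithmetic.

    Returns the list of iterates x_0, x_1, ...; stops early only if the
    gradient is exactly zero, i.e. the iterate is exactly (2, 1).
    """
    x = (Fraction(x0[0]), Fraction(x0[1]))
    iterates = [x]
    for _ in range(max_iter):
        g = grad(x)
        if g == (0, 0):
            break  # exactly at the minimizer
        gg = g[0] ** 2 + g[1] ** 2                           # g^T g
        gAg = A_DIAG[0] * g[0] ** 2 + A_DIAG[1] * g[1] ** 2  # g^T A g
        alpha = gg / gAg  # exact minimizer of phi(alpha) = f(x - alpha g)
        x = (x[0] - alpha * g[0], x[1] - alpha * g[1])
        iterates.append(x)
    return iterates


if __name__ == "__main__":
    # Print a few iterates from (0, 0), as fractions and as floats.
    for k, x in enumerate(steepest_descent_exact((0, 0), max_iter=6)):
        print(k, x[0], x[1], float(x[0]), float(x[1]))
```

Starting from $\mathbf{x}_0=(0,0)$, the first two iterates are $(10/17,\,20/17)$ and $(25/17,\,25/34)$, so $\mathbf{x}_2-\mathbf{x}^*=\tfrac{9}{34}(\mathbf{x}_0-\mathbf{x}^*)$. The step $\alpha_k$ depends only on the direction of $\mathbf{g}_k$, so this pattern repeats: $\mathbf{x}_{2k}-\mathbf{x}^*=(9/34)^k(\mathbf{x}_0-\mathbf{x}^*)\neq\mathbf{0}$ for every $k$. Starting from $(0,1)$ or $(2,0)$ instead, the first step lands exactly on $(2,1)$.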

Energies, Free Full-Text

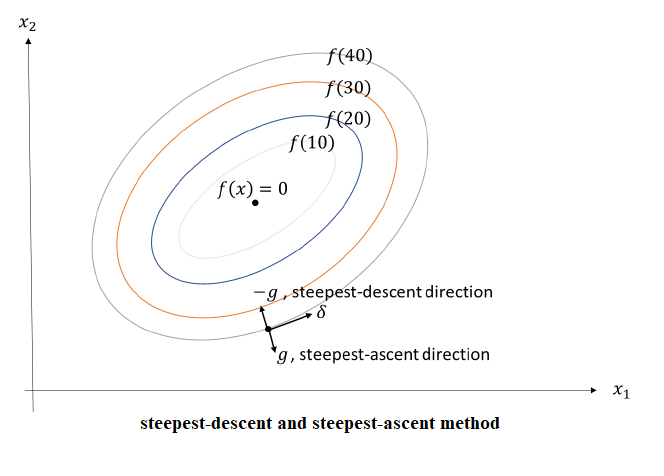

Steepest Descent Methods - NM EDUCATION

optimization - Steepest Descent in elliptical error surface - Mathematics Stack Exchange

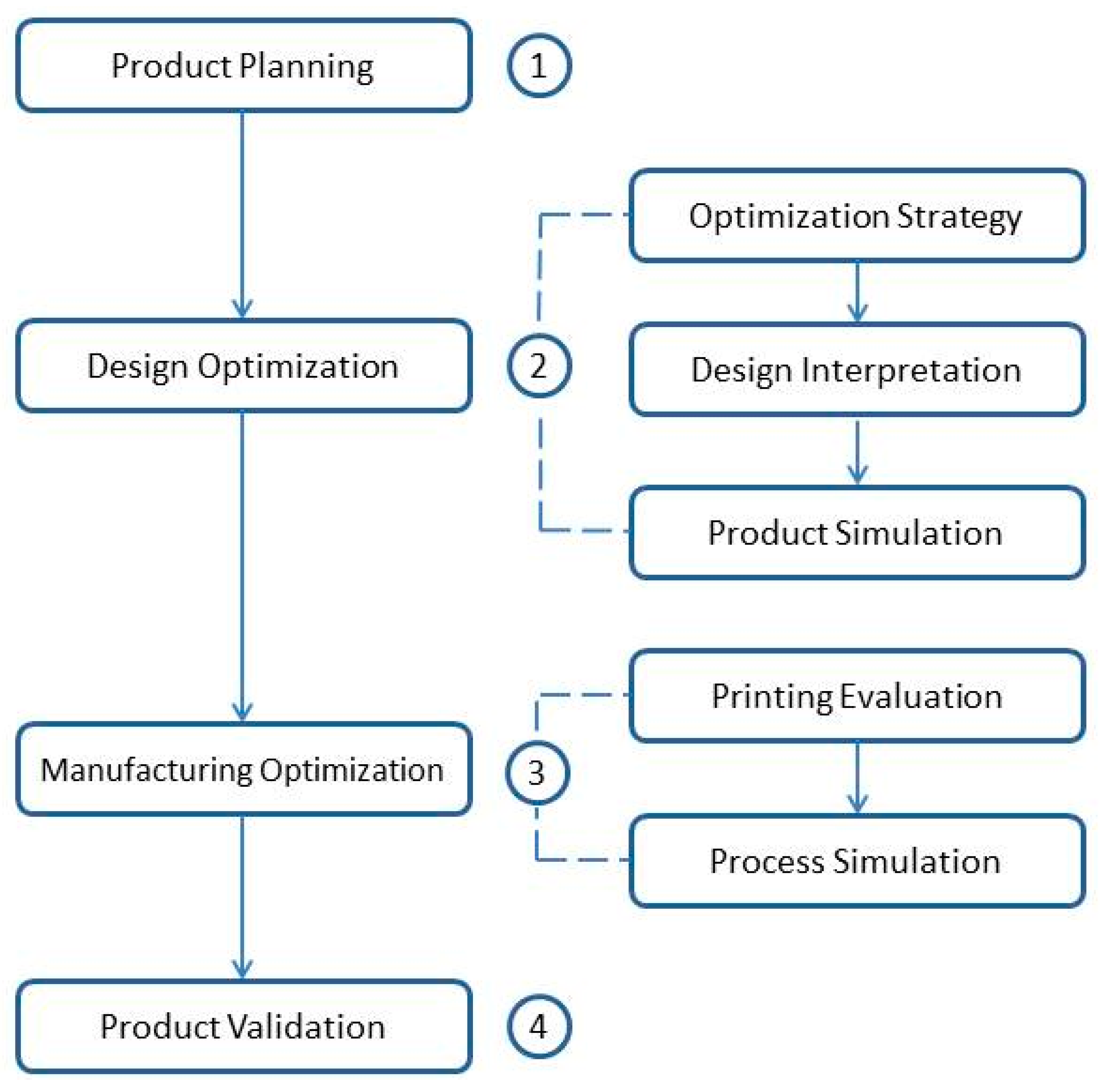

Applied Sciences, Free Full-Text

nonlinear optimization - Do we need steepest descent methods, when minimizing quadratic functions? - Mathematics Stack Exchange

Steepest Descent Method - an overview

Nonlinear programming - ppt download

Reference Request: Introduction to step-size complexity of optimization algorithms - Mathematics Stack Exchange

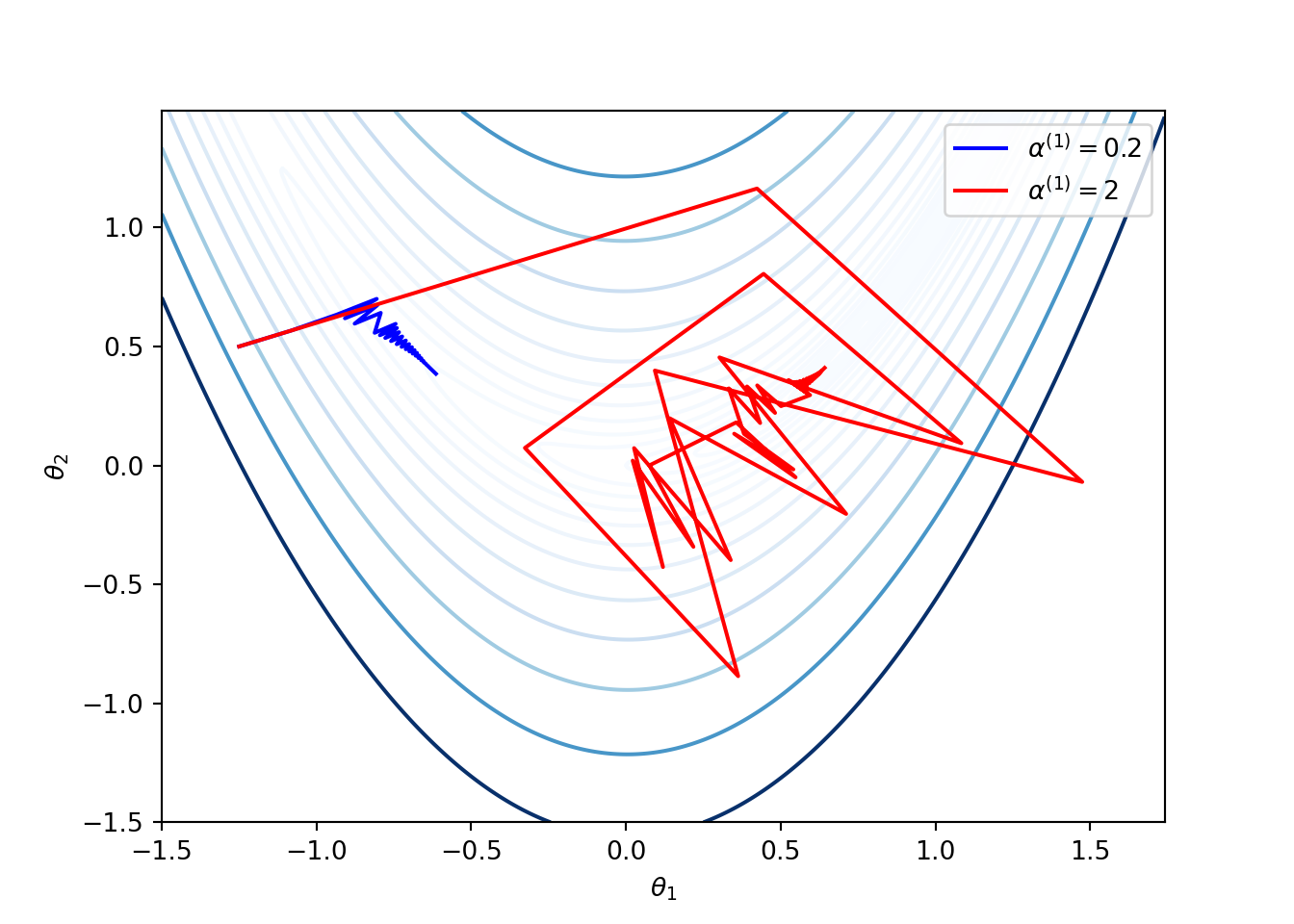

3 Optimization Algorithms The Mathematical Engineering of Deep Learning (2021)

Unconstrained Nonlinear Optimization Algorithms - MATLAB & Simulink - MathWorks Nordic

machine learning - Does gradient descent always converge to an optimum? - Data Science Stack Exchange

Recomendado para você

-

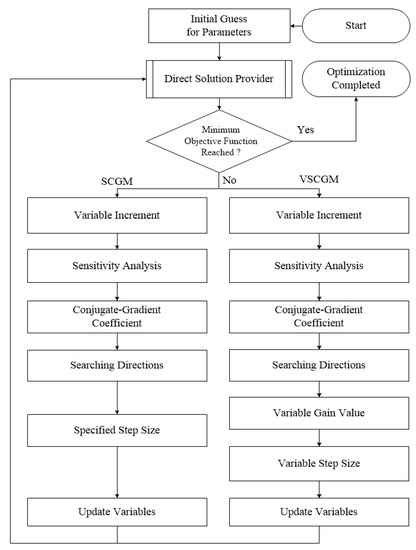

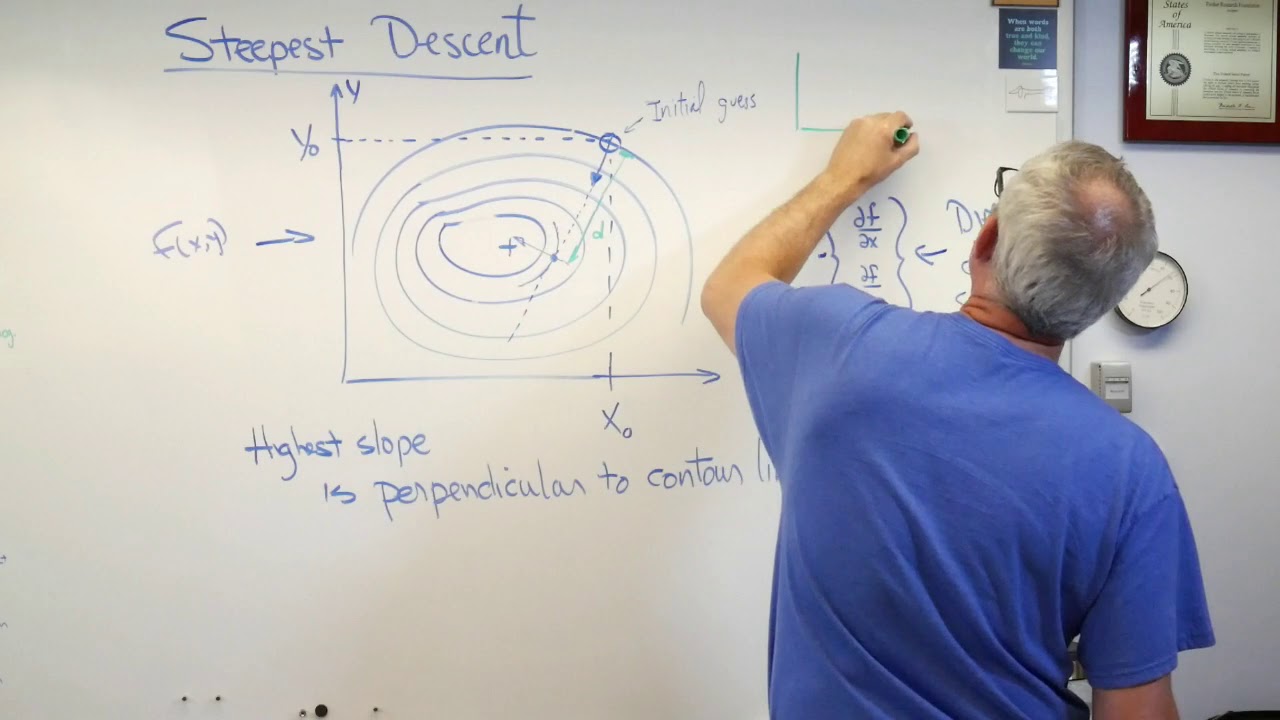

Steepest Descent Method Search Technique11 abril 2025

Steepest Descent Method Search Technique11 abril 2025 -

Steepest Descent Method - an overview11 abril 2025

Steepest Descent Method - an overview11 abril 2025 -

Applied Optimization - Steepest Descent11 abril 2025

Applied Optimization - Steepest Descent11 abril 2025 -

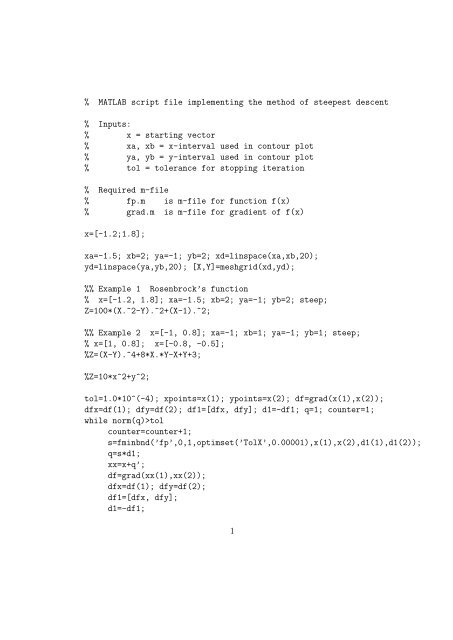

MATLAB script file implementing the method of steepest descent11 abril 2025

MATLAB script file implementing the method of steepest descent11 abril 2025 -

7: An example of steepest descent optimization steps.11 abril 2025

7: An example of steepest descent optimization steps.11 abril 2025 -

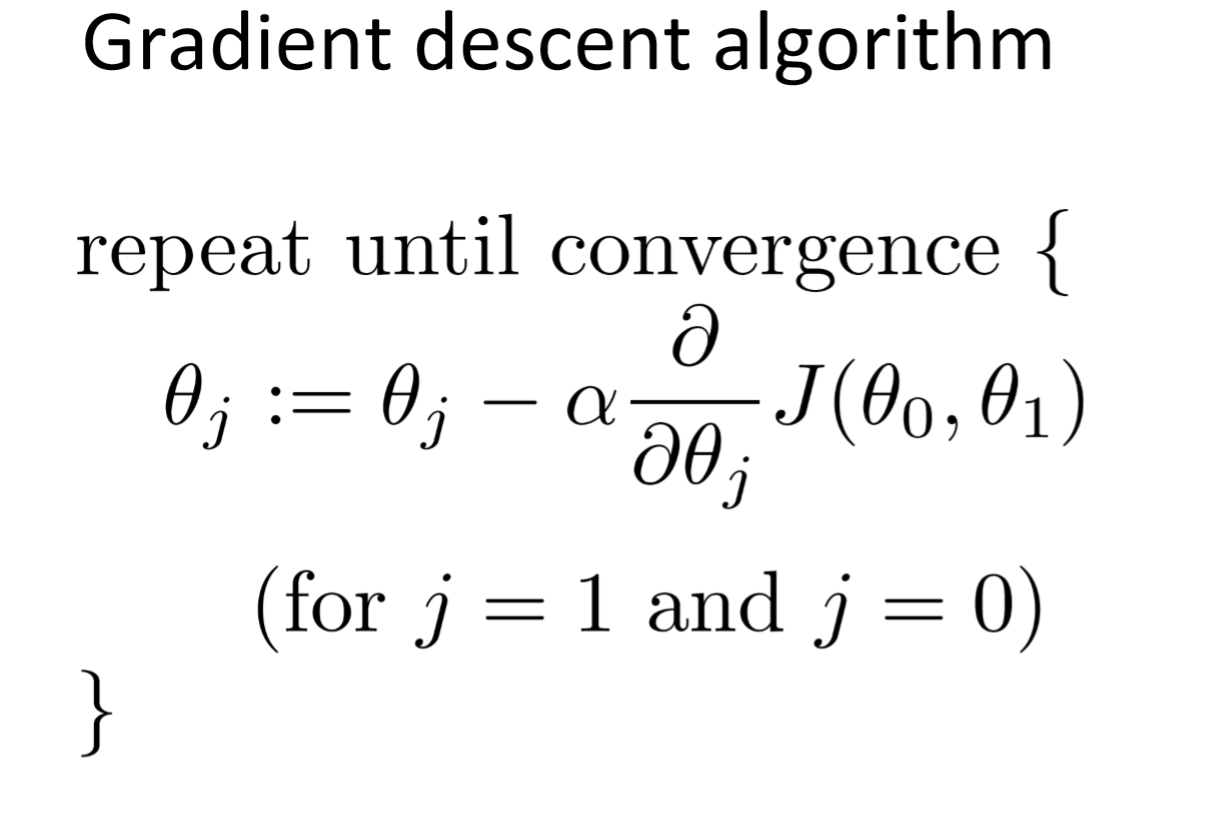

Using the Gradient Descent Algorithm in Machine Learning, by Manish Tongia11 abril 2025

Using the Gradient Descent Algorithm in Machine Learning, by Manish Tongia11 abril 2025 -

Chapter 4 Line Search Descent Methods Introduction to Mathematical Optimization11 abril 2025

Chapter 4 Line Search Descent Methods Introduction to Mathematical Optimization11 abril 2025 -

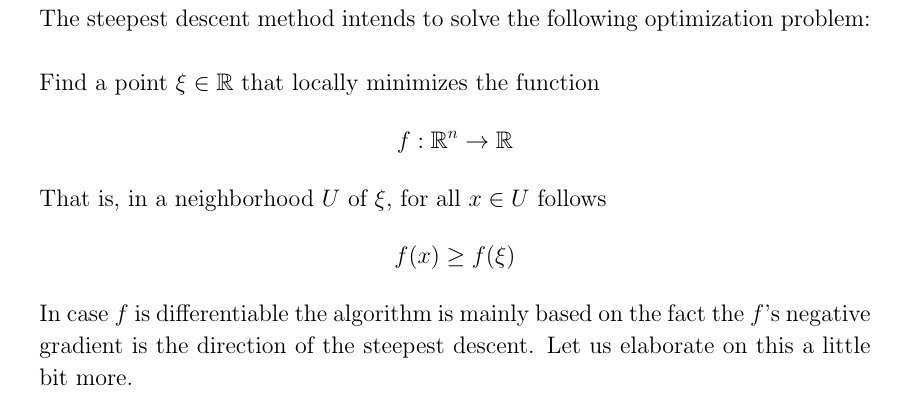

PDF) Steepest Descent juan meza11 abril 2025

PDF) Steepest Descent juan meza11 abril 2025 -

Steepest descent method in sc11 abril 2025

Steepest descent method in sc11 abril 2025 -

The Steepest Descent Algorithm. With an implementation in Rust., by applied.math.coding11 abril 2025

The Steepest Descent Algorithm. With an implementation in Rust., by applied.math.coding11 abril 2025

você pode gostar

-

O MELHOR jogador do Mundo de 202311 abril 2025

O MELHOR jogador do Mundo de 202311 abril 2025 -

Ghost of Tsushima Director's Cut: Iki island, release date, leaks, more - Dexerto11 abril 2025

Ghost of Tsushima Director's Cut: Iki island, release date, leaks, more - Dexerto11 abril 2025 -

MEME REDRAW) KONO DIO DA! by AlphaPrime1000 on DeviantArt11 abril 2025

MEME REDRAW) KONO DIO DA! by AlphaPrime1000 on DeviantArt11 abril 2025 -

Build de Samurai em Elden Ring: katanas, sangramento e muita11 abril 2025

Build de Samurai em Elden Ring: katanas, sangramento e muita11 abril 2025 -

Ao Oni 3 English Translation Part 111 abril 2025

Ao Oni 3 English Translation Part 111 abril 2025 -

Boneco Majin Boo em Promoção na Americanas11 abril 2025

Boneco Majin Boo em Promoção na Americanas11 abril 2025 -

Eleições Sistema Cofecon/Corecons: Conheça as chapas inscritas – Conselho Federal de Economia – COFECON11 abril 2025

Eleições Sistema Cofecon/Corecons: Conheça as chapas inscritas – Conselho Federal de Economia – COFECON11 abril 2025 -

Instagram, PDF, Hashtag11 abril 2025

-

Read Naruto: Surgeon Of Death In Boruto - Lame_craze - WebNovel11 abril 2025

-

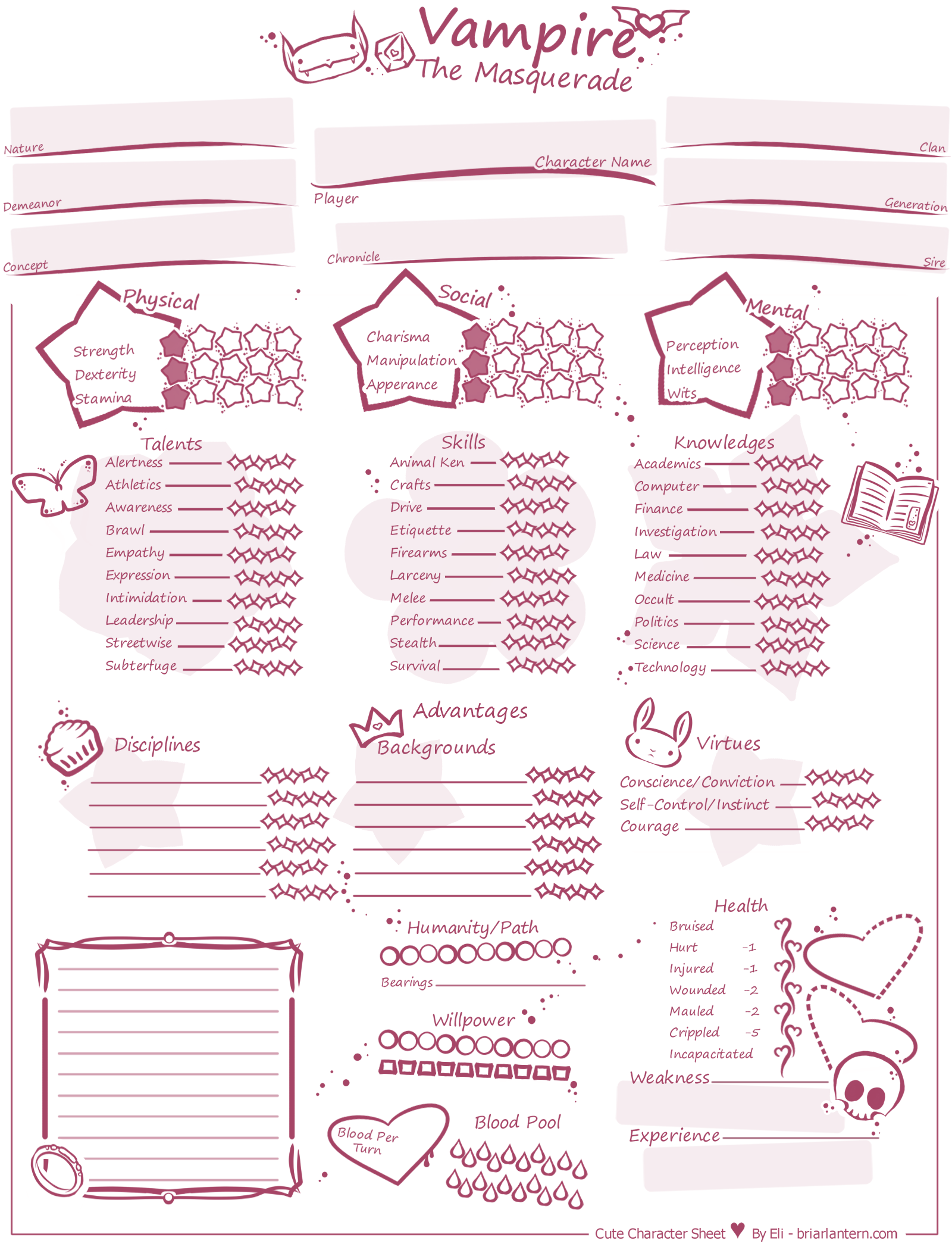

VampiretheMasqueradeCute.png11 abril 2025

VampiretheMasqueradeCute.png11 abril 2025